The Lone C++ Coder's Blog from The Lone C++ Coder's Blog

My apologies for the sudden instability of my blog. I’ve managed to make a hash of an update on the main Wordpress site when trying to update to a newer PHP version and had to switch to the Jekyll “backup” site that isn’t quite production ready yet. Comments will be available in due course; at the moment I’m trying to get the static site fully functioning and the various RSS feeds going again.

New home page design

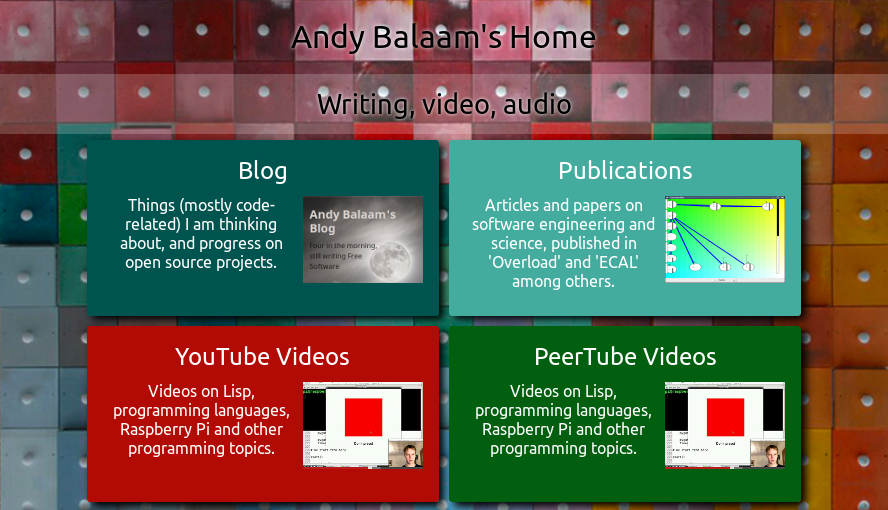

Andy Balaam from Andy Balaam's Blog

After years of getting around to it, I have redesigned my home page at artificialworlds.net.

It’s basically intended to make me look clever or productive or interesting or something. Alternatively, it gives you somewhere to find that thing you know I made but can’t find the link:

The background image is “Read where you are” by delaram bayat.

I am pleased with the page’s responsive design, clarity, fast page-load, and colourfulness.

What do you think?

Distorting the input profile, to stress test a program

Derek Jones from The Shape of Code

A fault is experienced in software when there is a mistake in the code, and a program is fed the input values needed for this mistake to generate faulty behavior.

There is suggestive evidence that the distribution of coding mistakes and the distribution of inputs generating fault experiences both have an influence on fault discovery.

How might these coding mistakes be found?

Testing is one technique; it involves feeding inputs into a program and checking the resulting behavior. What are ‘good’ input values, i.e., values most likely to discover problems? There is no shortage of advice for manually writing tests, suggesting how to select input values, but automatic generation of inputs is often somewhat random (relying on quantity over quality).

Probabilistic grammar driven test generators are trivial to implement. The hard part is tuning the rules and the probability of them being applied.

In most situations an important design aim, when creating a grammar, is to have one rule for each construct, e.g., all arithmetic, logical and boolean expressions are handled by a single expression rule. When generating tests, it does not always make sense to follow this rule; for instance, logical and boolean expressions are much more common in conditional expressions (e.g., controlling an if-statement), than other contexts (e.g., assignment). If the intent is to mimic typical user input values, then the probability of generating a particular kind of binary operator needs to be context dependent; this might be done by having context dependent rules or by switching the selection probabilities by context.

Given a grammar for a program’s input (e.g., the language grammar used by a compiler), decisions have to be made about the probability of each rule triggering. One way of obtaining realistic values is to parse existing input, counting the number of times each rule triggers. Manually instrumenting a grammar to do this is a tedious process, but tool support is now available.
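As a rough illustration of the counting step, here is a minimal Python sketch. It assumes some parser has already emitted a list of (nonterminal, rule) pairs, one per rule application; the function name `rule_probabilities` and the rule labels are hypothetical, not taken from any particular tool.

```python
from collections import Counter, defaultdict

def rule_probabilities(applications):
    """Turn observed rule applications into per-nonterminal probabilities.

    applications: iterable of (nonterminal, rule_name) pairs, assumed to
    come from parsing existing input with an instrumented grammar.
    """
    counts = defaultdict(Counter)
    for nonterminal, rule in applications:
        counts[nonterminal][rule] += 1
    return {
        nt: {rule: n / sum(c.values()) for rule, n in c.items()}
        for nt, c in counts.items()
    }

# Made-up observations, purely for illustration.
observed = [("expr", "add"), ("expr", "add"), ("expr", "mul"), ("term", "num")]
print(rule_probabilities(observed))
```

Each nonterminal gets its own distribution, which is what a generator needs when choosing between the alternatives of a single rule.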

Once a grammar has been instrumented with probabilities, it can be used to generate tests.
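A minimal sketch of such a generator, in Python, might look like the following. The toy expression grammar, its probabilities and the depth cut-off are all assumptions made for illustration; a real generator would load measured probabilities rather than hard-code them.

```python
import random

# Hypothetical toy grammar: each nonterminal maps to (probability, expansion)
# pairs. The first alternative of each rule is non-recursive, which the
# depth cut-off below relies on.
GRAMMAR = {
    "expr": [
        (0.6, ["term"]),
        (0.3, ["term", "+", "expr"]),
        (0.1, ["term", "*", "expr"]),
    ],
    "term": [
        (0.7, ["NUM"]),
        (0.3, ["(", "expr", ")"]),
    ],
}

def generate(symbol, rng, depth=0, max_depth=8):
    """Expand symbol into a list of terminal tokens."""
    if symbol == "NUM":
        return [str(rng.randint(0, 9))]
    if symbol not in GRAMMAR:
        return [symbol]  # literal terminal such as "+" or "("
    rules = GRAMMAR[symbol]
    if depth >= max_depth:
        expansion = rules[0][1]  # force the non-recursive alternative
    else:
        weights = [p for p, _ in rules]
        expansions = [e for _, e in rules]
        expansion = rng.choices(expansions, weights=weights, k=1)[0]
    tokens = []
    for part in expansion:
        tokens.extend(generate(part, rng, depth + 1, max_depth))
    return tokens

rng = random.Random(1)  # seeded so that runs are repeatable
print(" ".join(generate("expr", rng)))
```

The depth cut-off is one simple way of guaranteeing termination; it slightly distorts the distribution of deeply nested output, which may or may not matter for a given test campaign.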

Probabilities based on existing input will have the characteristics of that input. A recent paper on this topic (which prompted this post) suggests inverting rule probabilities, so that common becomes rare and vice versa; the idea is that this will maximise the likelihood of a fault being experienced (the assumption is that rarely occurring input will exercise rarely executed code, and such code is more likely to contain mistakes than frequently executed code).
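One simple way to picture inversion is to weight each alternative by the reciprocal of its measured probability and renormalise. This is only a sketch of the general idea, not necessarily the scheme the paper uses, and it assumes every probability is greater than zero; the function name `invert` is hypothetical.

```python
def invert(probabilities):
    """Return weights proportional to 1/p, renormalised to sum to 1.

    Assumes every p is > 0; a rule never seen in existing input would
    need separate handling.
    """
    if any(p <= 0 for p in probabilities):
        raise ValueError("all probabilities must be positive")
    reciprocals = [1.0 / p for p in probabilities]
    total = sum(reciprocals)
    return [r / total for r in reciprocals]

# The most common alternative (0.6) becomes the rarest, and vice versa.
print(invert([0.6, 0.3, 0.1]))
```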

I would go along with the assumption about rarely executed code having a greater probability of containing a mistake, but I don’t think this is the best test generation strategy.

Companies are only interested in fixing the coding mistakes that are likely to result in a fault being experienced by a customer. It is a waste of resources to fix a mistake that will never result in a fault experienced by a customer.

What input is likely to interact with coding mistakes to be the root cause of faults experienced by a customer? I have no good answer to this question. But, given that customer input contains patterns (at least in the world of source code, and I’m told in other application domains), I would generate test cases that are very similar to existing input, but with one sub-characteristic changed.

In the academic world the incentive is to publish papers reporting loads-of-faults-found, the more the merrier. Papers reporting only a few faults are obviously using inferior techniques. I understand this incentive, but fixing problems costs money and companies want a customer oriented rationale before they will invest in fixing problems before they are reported.

The availability of tools that automate the profiling of a program’s existing input, followed by the generation of input having slightly, or very, different characteristics, makes it easier to answer some very tough questions about program behavior.

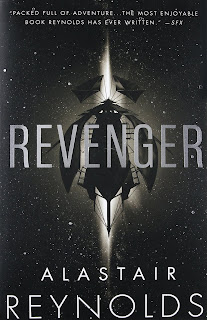

Alastair Reynolds is on form with this steampunk meets pirates space opera.

Paul Grenyer from Paul Grenyer

Revenger

Alastair Reynolds

ISBN-13: 978-0575090552

Alastair Reynolds is on form with this steampunk meets pirates space opera.

The story is cliched and almost totally predictable, but very enjoyable at the same time. I’ve started wondering a lot recently if the body count in such stories is worth the life of the person who is being rescued, and I think that remains to be seen in the sequel.

Fura Ness is extremely driven and I struggled to understand a lot of her decisions.

As with much of Reynolds’ work, there is no explanation for why this universe is the way it is and it feels as strange as when the clock strikes 13 in 1984, but makes me want to read more in the hope of understanding.

While most of the story is linear and complete, there’s a large chunk towards the end which feels missing. The climax is a little brief and, just like in Terminal World, there is suddenly a lot of new plot in the final chapter.

Where the story goes next will be very interesting.

Finally On Natural Analogarithms – student

student from thus spake a.k.

We proceeded to define functions of such numbers by applying operations of linear algebra to their ℓ-space vectors; firstly with their magnitudes and secondly with their inner products. This time, I shall report upon our explorations of the last operation that we have taken into consideration; the products of matrices and vectors.

Simple data structures

Arne Mertz from Simplify C++!

Keep simple data structures simple! There’s no need for artificial pseudo-encapsulation when all you have is a bunch of data. Recently I have come across a class that looked similar […]

The post Simple data structures appeared first on Simplify C++!.

Code Like a Girl T-shirts

Andy Balaam from Andy Balaam's Blog

There are lots of people missing from the programming world: lots of the programmers I meet look and sound a lot like me. I’d really like it if this amazing job were open to a lot more people.

One of the weird things that has happened is that somehow we seem to have the idea that programming is only for boys, and I’d like to fight against that idea by wearing a t-shirt demonstrating how cool I think it is to be a woman coder.

So, I commissioned a design from an amazing artist called Ellie Mars, who I found through her Mastodon.art page @elliemars@mastodon.art. She did an amazing job, sending sketches and ideas back and forth, and finally she came up with this awesome design:

I’ve printed a t-shirt for myself that I will give myself for Christmas, and I’ve made a page on Street Shirts so you can get one too!

I’ve uploaded 2 designs, but if you’d like me to set something different up for you, let me know. Also, these links will expire, but I can re-set them up for you if you contact me. They are reasonably cheap:

To ask for different designs or an unexpired link, you are welcome to contact me (via DM or publicly) on twitter @andybalaam or Mastodon @andybalaam@mastodon.social, or by email, via a short test.

Ellie and I agreed to set up these t-shirt sales with no profit for us because we’d like to get the word out. If they are popular we might add a little, so get in fast for a good deal!

10 points for anyone who can recognise the code in the background. It’s from one of my favourite programs.

Personally, I think we all spend too much of our time walking around advertising faceless corporations when we could be saying something a bit more useful on our clothes. What do you think of this idea? Maybe you could design a similar t-shirt? Let me know your thoughts in the comments below or on Twitter or Mastodon.

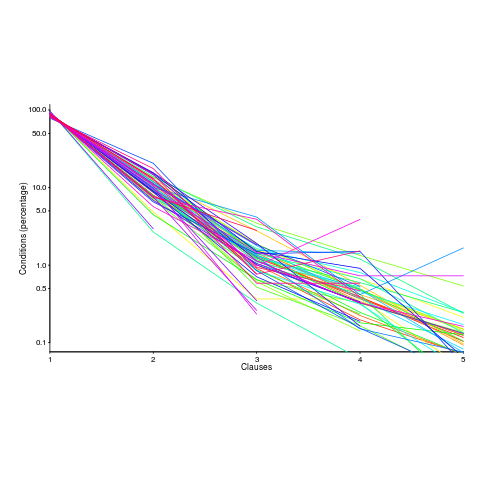

Growth of conditional complexity with file size

Derek Jones from The Shape of Code

Conditional statements are a fundamental constituent of programs. Conditions are driven by the requirements of the problem being solved, e.g., if the water level is below the minimum, then add more water. As the problem being solved gets more complicated, dependencies between subproblems grow, requiring an increasing number of situations to be checked.

A condition contains one or more clauses, e.g., a single clause in: if (a==1), and two clauses in: if ((x==y) && (z==3)); a condition also appears as the termination test in a for-loop.
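To make the definition concrete, here is a rough Python sketch that counts clauses by counting the `&&` and `||` operators in the text of a condition. It is a simplification for illustration only: it ignores string literals, comments and the ternary operator, and the function names are hypothetical.

```python
import re
from collections import Counter

def clause_count(condition):
    """Number of clauses = number of && / || operators, plus one."""
    return len(re.findall(r"&&|\|\|", condition)) + 1

def clause_histogram(conditions):
    """Map clause count -> how many conditions have that many clauses."""
    return Counter(clause_count(c) for c in conditions)

# The two conditions from the text, plus a for-loop termination test.
print(clause_histogram(["a==1", "(x==y) && (z==3)", "i < n"]))
```

A measurement tool would work on the parse tree rather than on raw text, but the counting rule is the same.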

How many conditions containing one clause will a 10,000 line program contain? What will be the distribution of the number of clauses in conditions?

A while back I read a paper studying this problem (“What to expect of predicates: An empirical analysis of predicates in real world programs”; Google is currently not finding a copy online, grrr, so you will have to hassle the first author: durelli@icmc.usp.br, or perhaps it will get added to a list of favorite publications {be nice, they did publish some very interesting data}). It contained a table of numbers, and yesterday my analysis of the data revealed a surprising pattern.

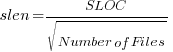

The data consists of SLOC, number of files and number of conditions containing a given number of clauses, for 63 Java programs. The following plot shows percentage of conditionals containing a given number of clauses (code+data):

The fitted equation, for the number of conditionals containing a given number of clauses, is:

where: […] (the coefficient for the fitted regression model is 0.56, but square-root is easier to remember), […], and […] is the number of clauses.

The fitted regression model is not as good when […] or […] is always used.

This equation is an emergent property of the code; simply merging files to increase the average length will not change the distribution of clauses in conditionals.

When […], all conditionals contain the same number of clauses, off to infinity. For the 63 Java programs, the mean […] was 2,625, maximum 11,710, and minimum 172.

I was expecting SLOC to have an impact, but was not expecting number of files to be involved.

What grows with SLOC? Number of global variables and number of dependencies. There are more things available to be checked in larger programs, and an increase in dependencies creates the need to perform more checks. Also, larger programs are likely to contain more special cases, which are likely to involve checking both general and specific values (i.e., more clauses in conditionals); ok, this second sentence is a bit more arm-wavy than the first. The prediction here is that the percentage of global variables appearing in conditions increases with SLOC.

Chopping stuff up into separate files has a moderating effect. Since I did not expect this, I don’t have much else to say.

This model explains 74% of the variance in the data (impressive, if I say so myself). What other factors might be involved? Depth of nesting would be my top candidate.

Removing non-if-statement related conditionals from the count would help clarify things (I don’t expect loop-controlling conditions to be related to amount of code).

Two interesting data-sets in one week, with 10 days still to go until Christmas.

The power of small declines

Allan Kelly from Allan Kelly Associates

Last year I wrote about the power of 1% improvement, and how powerful this can be when that improvement occurs frequently. For example, if a team improves 1% a week then over the course of 50 weeks (a year) they would improve by over 62%.
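The compounding arithmetic can be checked with a couple of lines of Python. This is only a sketch: whether the first week counts as a compounding step is an assumption, so both readings are printed.

```python
def compound_gain(rate, steps):
    """Total fractional improvement after `steps` compounding steps."""
    return (1 + rate) ** steps - 1

# 49 steps: week 1 is the baseline and weeks 2..50 each improve by 1%.
# 50 steps: every one of the 50 weeks counts as an improvement.
for steps in (49, 50):
    print(steps, f"{compound_gain(0.01, steps):.1%}")
```

With 49 steps the gain is roughly 62.8%, and with 50 steps roughly 64.5%; either way it is over 62%.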

A few days ago I had a revelation: the opposite is also true.

If a team enters a downward spiral then a 1% decline in productivity each week has similar effects but in the opposite direction. In fact, as I think about this I see more and more occasions where a team can lose small amounts of performance which actually saps their productive capacity. Like the frog in hot water they don’t realise they are cooked until it is too late.

The graph above shows what happens to value-add over a year when a team is 1% less productive each week. The blue bars show how value-add falls each week. The red line shows how each week the team declines slightly compared to the week before.

That the red line gets higher seems odd but it makes sense: each week the team is 1% less productive than the week before. So at the end of the second week the team is 1% less productive than they were in the first week. At the end of week 50 they are 1% less productive than in week 49, but that drop is only 0.62% of week 1’s productivity because the 1% decline was from a lower total. Getting worse slows down because the team are worse!
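The numbers in that paragraph can be reproduced with a short Python sketch. It assumes week 1 is the baseline with productivity 1.0 and that each later week is 1% below the one before; the function name `weekly_levels` is hypothetical.

```python
def weekly_levels(rate, weeks):
    """Productivity for weeks 1..weeks, with week 1 fixed at 1.0."""
    return [(1 - rate) ** (week - 1) for week in range(1, weeks + 1)]

levels = weekly_levels(0.01, 50)
# Drop between week 49 and week 50, measured against week 1's productivity.
drop_week_50 = levels[48] - levels[49]
print(f"week 50 level: {levels[49]:.3f}")
print(f"week 50 drop:  {drop_week_50:.2%} of week 1")
```

Under these assumptions the final week’s drop works out at about 0.62% of the starting level, and the team ends the year at roughly 61% of its original productivity.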

At some point the value-add ceases to justify the cost of the team. But as these changes are very gradual that is going to be hard to see.

Why might this happen? – lots of reasons

First off there are the corporate drains on productivity. Consider corporate security processes: think about passwords alone, the need to change passwords regularly, have longer and longer passwords, have different passwords on different systems, and so on. Sure cyber security is important but it can also be a drain on productivity.

Then there are the other hassles of working almost anywhere: finding meeting rooms, booking meeting rooms, setting up webex conference calls, “cake in the kitchen”, restrictions on internet use – whether it is limited access, site blacklists, or authorised “white list” sites only.

It is easy to see how a large corporate can gradually drain a team. But there are other reasons.

There are personal drains on productivity too. Consider internet use during work time: the likes of Facebook, LinkedIn and Twitter aim to keep you on their sites as long as possible. Using LinkedIn is almost a necessity in modern work – got a meeting coming up? someone applied for a job? looking for a lead? – but once you’re in, Microsoft wants to keep you there.

Then think about your code base: is the code getting better or worse?

- Easier to work with or harder to work with?

- Do you write an automated test for every change? Or save time today at the cost of time tomorrow, and the next day, and the day after, and …

- Do you take time to refactor every time you make a change? or are you constantly kludging it and making the next change slightly harder?

Notice here I’m not talking about those big time consuming changes that happen occasionally: new employees, reorganisations, mergers – things that happen occasionally, take a chunk of time but finish.

So, is your work environment getting a little bit better every week? or a little bit harder?

If we think at the “very little” level it is unlikely that things are the same as last week. Staying the same will be hard. Things are probably a little bit better or a little bit harder. Extend that over a year and – as the theory of 1% change shows – things are a lot worse, or maybe a lot better.

What is important is the trend, and the trend is going to be set by the culture. Do you have a culture of small improvements? Or an acceptance of small degradation?

Finally, because there are so many minor factors that can sap your productive capacity, it is quite likely that if you aren’t getting more productive then you are getting less. In other words, you need to be working to improve just to stand still.

The post The power of small declines appeared first on Allan Kelly Associates.

“Hello World” Stories

Chris Oldwood from The OldWood Thing

I’ve always tried really hard to fight against “technical stories”. These are supposedly user stories but which are really framed as a solution to a problem and really just technical tasks. In “Turning Technical Tasks Into User Stories” I looked at how it’s often possible to elevate these from an obvious solution to a problem back up to a problem which needs to be solved. At this point you may discover there are other, hopefully cheaper, solutions to the problem which have been missed in the original analysis either because things have changed or different people are doing the thinking.

On the flip-side there are occasionally times where, after having looked at a few related stories, it’s apparent that they all require the same underlying mechanism to work. One common solution to this is to bulk up the first story with the technical work and let the rest flow through as normal. This way you have no technical work on your backlog per-se as it’s all hidden in the stories.

Transparency

What I don’t like about this approach is that one story arbitrarily gets hit with a load of extra work, which, if you’re using historical data to stick a finger in the air for estimation of similar work later, skews the average somewhat. It also means that from a visibility perspective one story takes longer while the mechanism is being built.

One way I’ve found to address this has been to pull out the bare bones of the technical work into a “Hello, World!” story [1]. This story is framed around building the skeleton of the mechanism that will be used to drive the implementation of the subsequent features. The aim is to keep the scope minimal enough that we avoid speculating while still delivering something which stands on its own two feet and remains clearly visible on the board.

Value Proposition

While the value to the end-user is in the eventual feature, the value in the mechanism is proving to the development team that the basic approach seems sound. With the skeleton built, the idiosyncrasies around each individual feature can then be dealt with appropriately at the right time and accounted for in the usual way.

To be clear this is not about doing a spike or building a prototype, although that may have happened earlier to gain the knowledge needed to undertake this piece of work. No, here we’re talking about building the bare bones of a real mechanism along with the most basic feature possible.

The reason I’ve called these “Hello World” Stories is probably self-evident: it alludes to the classic program many have chosen as their first – to write “Hello, World!” to the console. In this context the name is intended to conjure up simplicity and remind us that what we’re doing is delivering the minimum required to make the platform viable. We probably won’t literally write “Hello, World!” to the console, but it may be a log message instead that we can then observe and monitor, or a message on a queue that we can see discarded. Essentially whatever we can do to make its effects observable without wasting any real effort or leaving it partially complete.

Based on the classic INVEST acronym we should strive to make every unit of work: Independent, Negotiable, Valuable, Estimable, Small and Testable. By splitting it out from one of the arbitrary features it becomes more independent, negotiable, estimable and small which can be useful should short-term priorities change. And by extending the scope from a pure mechanism just a little bit further to the most trivial feature possible we make it more testable from a technical perspective, even if not from a product viewpoint. Most importantly, however, is it valuable in its own right? I think sometimes splitting the mechanism out gives value by making the I,N,E,S and T more tangible. In particular breaking work down into smaller deliverable units is often the most valuable practice even if occasionally the end-user has nothing initially to show for it.

Ultimately, I guess, I can’t ever remember anyone complaining they had broken their work down into pieces that were so small they were too visible.

[1] I’m sure there is an argument about this not being a “story” per-se but just a “task”. However I prefer to call it a story because our “Hello, World!” realization should have a grounding in the real world, even if it is more abstract than what the end-user will eventually receive.

[2] There is an assumption here that we’ve already decided we cannot or do not want to solve the dependent features in different ways, probably because it would be far more costly (in the long run) than briefly delaying them by building a common pillar.