Derek Jones from The Shape of Code

I was at the Wikihouse hackathon on Wednesday. Wikihouse is an open-source project involving prefabricated house designs and building processes.

Why is a software guy attending what looks like a very non-software event? The event organizers listed software developer as one of the attendee skill sets. Also, I have been following the blog Construction Physics, where Brian Potter has been trying to work out why the efficiency of building construction has not significantly improved over many decades; the approach is wide-ranging, data driven and has parallels with my analysis of software engineering. I counted four software people at the event, out of 30’ish attendees; Sidd I knew from previous hacks.

Building construction shares some characteristics with software development. In particular, projects are bespoke, but constructed using subcomponents that are variations on those used in most other projects of the same kind of building, e.g., walls±window frames, floors/ceilings.

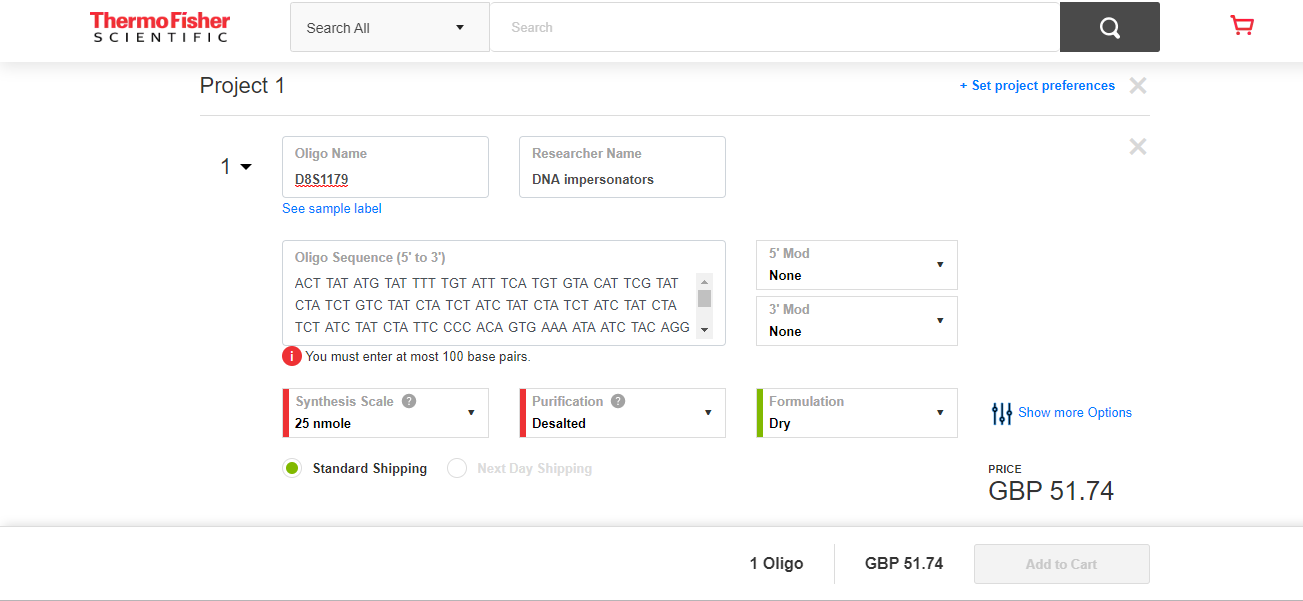

The Wikihouse design and build process is based around a collection of standardised, prefabricated subcomponents, called blocks; these are made from plywood, slotted together, and held in place using butterfly/bow-tie joints (wood has a negative carbon footprint). A library of blocks is available, with the page for each block including a DXF cutting file, assembly manual, 3-D model, and costing; there is a design kit for building a house, including a spreadsheet for costing, and a variety of How-Tos. All this is available under an open source license. The Open Systems Lab is implementing building design software and turning planning codes into code.

Not knowing anything about building construction, I have no way of judging the claims made during the hackathon introductory presentations, e.g., cost savings, speed of build, strength of building, expected lifetime, etc.

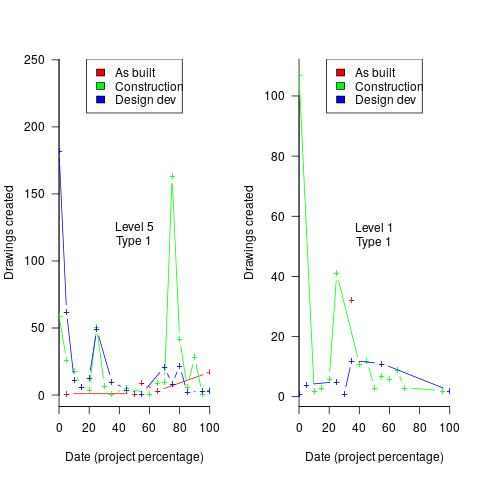

Constructing lots of buildings using Wikihouse blocks could produce an interesting dataset (provided those doing the construction take the time to record things). Questions such as: how does construction time vary by team composition (self-build is possible) and experience, and by number of rooms and their size spring to mind (no construction time/team data was recorded during the construction of the ‘beta test’ buildings).

The morning was taken up with what was essentially a product pitch, then we got shown around the ‘beta test’ buildings (they feel bigger on the inside), lunch and finally a few hours hacking. The help they wanted from software people was in connecting together some of the data/tools they had created, but with only a few hours available there was little that could be done (my input was some suggestions on construction learning curves and a few people/groups I knew doing construction data analysis)

Will an open source approach enable the Wikihouse project to succeed with its prefabricated approach to building construction, where closed source companies have failed when using this approach, e.g., Katerra?

Part of the reason that open source software succeeded was that it provided good enough functionality to startup projects/companies who could not afford to pay for software (in some cases the open source tools provided superior functionality). Some of these companies grew to be significant players, convincing others that open source was viable for production work. Source code availability allows developers to use it without needing to involve management, and plenty of managers have been surprised to find out how embedded open source software is within their group/organization.

Buildings are not like software, lots of people with some kind of power notice when a new building appears. Buildings need to be connected to services such as water, gas and electricity, and they have a rateable value which the local council is keen to collect. Land is needed to build on, and there are a whole host of permissions and certificates that need to be obtained before starting to build and eventually moving in. Doing it, and telling people later is not an option, at least in the UK.