Derek Jones from The Shape of Code

Assume you are responsible for two teams who independently work on projects, say Team A and Team B. The teams have different work completion rates, with Team A completing work at the rate of 70 widgets per week, while Team B completes 30 widgets per week. Both teams always work on projects that require the completion of the same number of widgets.

You have the resources to send just one of the teams on a course. It is predicted that sending Team A on the course would improve their performance to 110 widgets per week, while attending the course would improve the performance of Team B to 40 widgets per week.

Senior management have decreed that time to market is the metric by which project managers are judged.

You want to impress senior management by significantly improving time to market for your projects; which team do you send on the course (i.e., the one that is likely to experience the largest reduction in time to market)?

This question is a restatement of a one involving cars travelling at different speeds, that has grown into a niche research area. Studies have found that a large percentage of subjects give the wrong answer, and they are said to have a time-saving bias, or time-loss bias.

The inability to correctly process “inverse variables” has been given as the reason people tend to give the wrong answer. The term “inverse variables” comes from the formula for calculating completion time, where the velocity appears as the denominator. Another way of looking at this problem is that when going slowly, there is more scope for improvement, compared to when going much faster.

A speed increase from 30 to 40 is only 10, or a 33% improvement; while an increase from 70 to 110 is an increase of 40, or 57%. Based on these numbers, Team A should be sent on the course.

However, we are interested in time to market. Let’s assume that both teams have to complete a project requiring 100 widgets. Before attending the course, Team A completes 100 widgets in 100/70=1.4 weeks, and Team B completes 100 widgets in 100/30=3.3 weeks. After attending the course, Team A would complete 100 widgets in 100/110=0.91 weeks, and Team B would complete 100 widgets in 100/40=2.5 weeks. Time to market for Team A has been reduced by (1.4-0.9)=0.5 weeks, while the reduction for Team B is (3.3-2.5)=0.8 weeks. So sending Team B on the course makes you look better, on the time to market metric.

If somebody ran an experiment with project managers, would the subjects tend to incorrectly process “inverse variables”. Well, somebody has done the experiment, and yes, many subjects exhibited the time-saving bias (the experimental scenario described in the appendix is a lot easier to understand than the one in the main body of the paper, which is a mess; Magne Jørgensen continues to be the only person doing interesting experiments in software estimation).

It has become common practice that, when a large percentage of subjects in a psychology experiment respond in ways that are inconsistent with a mathematical approach, the behavior is labelled as being a bias. I think the use of this terminology makes the behavior sound more interesting than it actually is; what’s wrong with saying that people make mistakes. Perhaps labelling experimental responses as being a bias makes it easier to get papers published.

Whether people are biased, or don’t pay enough attention, when solving non-trivial equations, what might be done about it?

This is not about whether any particular metric is a useful one, rather it is about calculating the right answer for whatever metric happens to be chosen.

Would an awareness campaign highlighting the problems people have with “inverse variables” be worthwhile? I don’t think so. Many people have problems with equations, and I don’t see why this case is more worthy of being highlighted than any other.

Am I missing something?

Psychology researchers are interested in figuring out the functioning of the brain/mind, so they are looking for patterns in the responses subjects give. Once someone has published a few papers on a research topic, they become invested in it. If they continue to get funding, the papers keep on coming. Sometimes a niche topic acquires a major following, and the work contributes to a major change of thinking about the mind, e.g., the Wason selection task helped increase the evidence that culture has an impact on cognitive behavior.

I think that software engineering researchers need to carefully evaluate the likely importance of behaviors that psychology researchers have labelled as a bias.

) (

) (

) (

) (

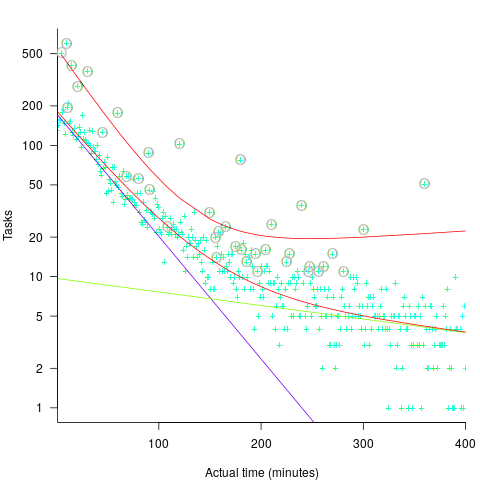

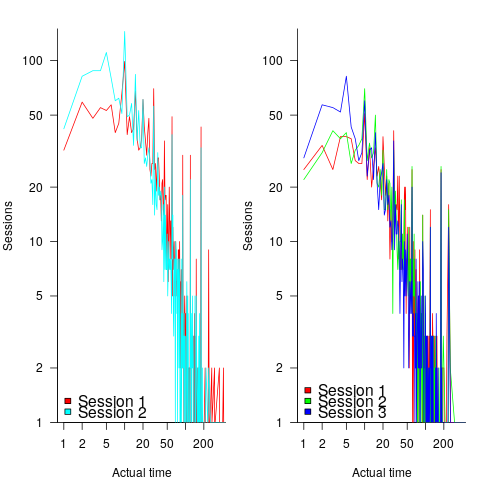

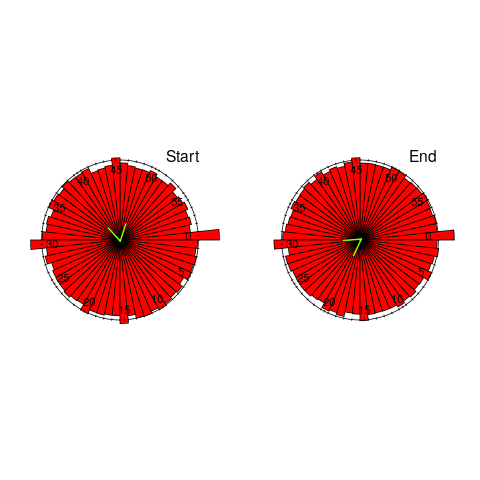

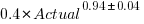

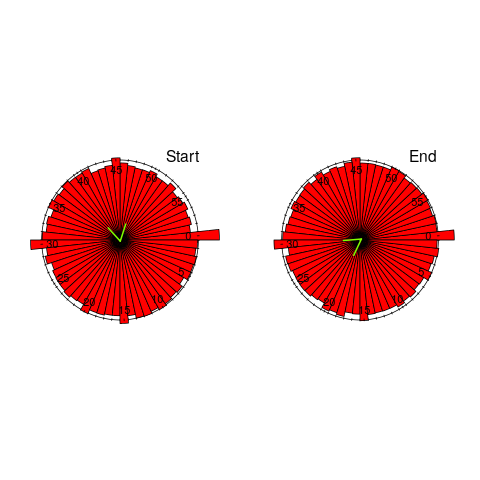

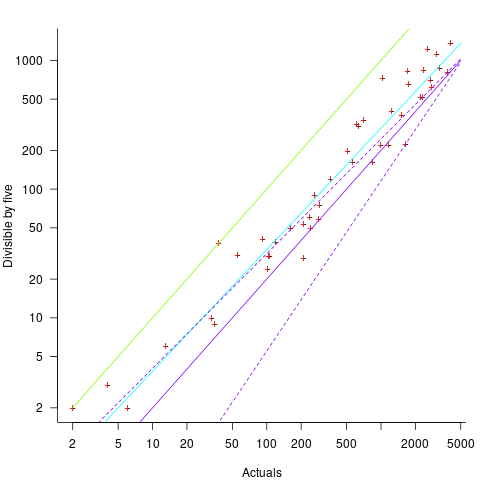

(there is a small variation in the value of the constant; the smallest value for project 615 was probably calculated rather than being human selected).

(there is a small variation in the value of the constant; the smallest value for project 615 was probably calculated rather than being human selected). . I await the data needed to answer this question.

. I await the data needed to answer this question.