The Lone C++ Coder's Blog from The Lone C++ Coder's Blog

I have been toying with the idea of migrating this blog to a static site to simplify its maintenance for some time. While WordPress is a great tool, this blog is a side project and any time I have to spend maintaining WordPress gets deducted from the time I have to write for the blog. Keep in mind that I'm self-hosting this blog and it's actually running on a Linux VM that only handles the blog.

Warning signs of a failing outsourcer

Allan Kelly from Allan Kelly Associates

It is 2021 and unfortunately on Friday I felt the need to repost “Dear Customer, The Truth about IT“. Little has changed in the 10 years since I wrote the original – if I was writing it today probably the only thing I would change is “IT”, I’d write “Digital” (I should probably also change Manchester United but …).

Unfortunately the vast majority of suppliers are engaged on the basis of their marketing materials, sales pitch and promises. This tells you nothing about their actual ability to deliver working software. The suppliers can all hire great marketing people and use the same words. They can hire and incentivise the best sales people, and they can all take you out for a good meal, a round of golf or to a strip-club. (Oh, and they can all find a few “satisfied customers” to provide a testimony.)

The only real way to know if a supplier can deliver is to see them in action. So how can you tell things might be going wrong? What are the warning signs?

With help from Mike Burrows and John Clapham I’ve come up with this list of early warning signs. We were thinking in the context of a client-supplier (outsourced) relationship but many of them apply if you are working with internal teams too.

Staffing

1) Supplier loads teams up with extra managers: test managers a speciality

1.1) Team members don’t make decisions and defer problems to managers: there is a manager for every problem

1.2) Offshore teams have parallel management hierarchies

1.3) Suppliers feel the need to mark all your managers with their own manager (who is then duplicated offshore)

2) Inverted staffing pyramids (few devs at the bottom, lots of managers, BAs & other non-coders above)

3) People get swapped by suppliers with little notice

3.1) Short term substitutions are made: I once saw a supplier bring in a temporary SAP HR consultant to cover the usual consultant’s 2-week holiday. There was no way the substitute could come up to speed in that time let alone contribute positively.

3.2) People bait & switch: the people you first met didn’t last long, they were substituted for inexperienced people

3.3) “I can do that” – you get people new to their role, you get who they have available, people with experience in one role fill another role; a project manager plays coach, a delivery manager plays scrum master

4) Part time assignees (particularly managers): work a few hours a week on the project, see 1.1.

Get ready

5) Long running “set up” phases

5.1) You spend longer pondering the future than the time it takes to create the future

5.2) A lot of time is spent agonising about infrastructure changes rather than just doing them

5.3) Team advocates for, and does, investment in infrastructure and “reusable code” before anything usable is actually delivered

Reporting not delivering

6) Supplier does not deliver working software

7) Supplier does not deliver working software every two weeks

In 2021 delivering working software to production every two weeks, or at least usable, potentially releasable software, is table stakes. The best teams deliver multiple times a day. If the supplier can’t deliver something by the end of week 4 you have a second rate supplier. Get out now.

8) Reporting hours done rather than demonstrating working software and stories

9) “Watermelon report”: green on the outside when everything inside is red; impressive looking reports which don’t distract from the fact that nothing, or very little, was actually complete

9.1) Claiming stuff is done when it hasn’t finished testing

9.2) A Definition of Done which leaves work not-done – Mike has a good post at agendashift.com/done.

Other warning signs

10) You invest as much time in their org design as your own. If this starts to include people performance monitoring and management, what are you gaining over using your own people?

11) Suppliers always say yes: no push back and no negotiation. Feedback and scrutiny of your requests are signs they are paying attention to your needs. If you ask for the impossible it is better the supplier tells you so than accepts what you ask for. Ideally you want a supplier who can highlight the difficulties with your suggestion and work with you to achieve something akin to what you want even if you have to rethink your request.

12) Your own people are disenfranchised/disgruntled/frustrated by the arrangement. Particularly noticeable where people are expected to work in a different time zone to suit the other party and when outsourcer staff are elevated (faster, smarter, etc) over the existing people.

In most of these cases the supplier is working around their own constraints rather than putting your needs first.


The post Warning signs of a failing outsourcer appeared first on Allan Kelly Associates.

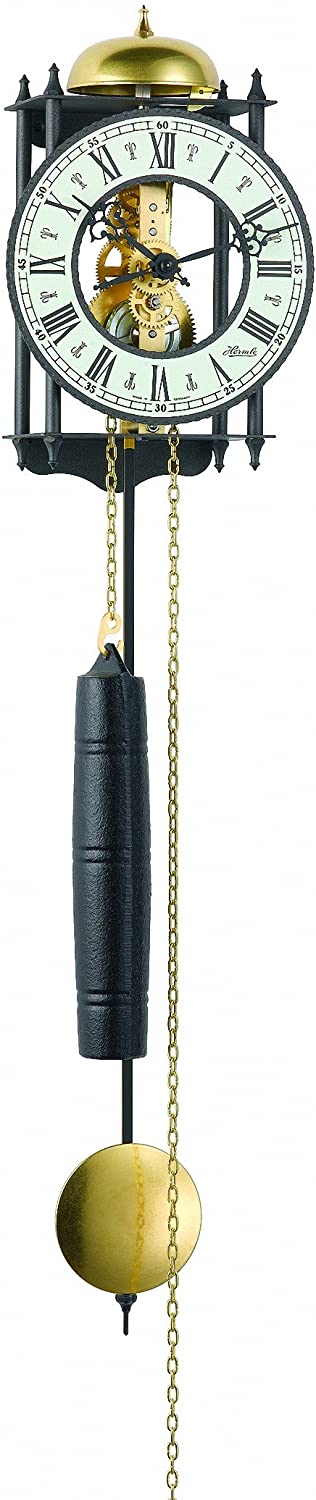

My new kitchen clock

Derek Jones from The Shape of Code

After several decades of keeping up with the time, since November my kitchen clock has only been showing the correct time every 12 hours. Before I got to buy a new one, I was asked what I wanted for Christmas, and there was money to spend.

Guess what Santa left for me:

The Hermle Ravensburg is a mechanical clock, driven by the pull of gravity on a cylindrical 1kg of Iron (I assume).

Setup requires installing the energy source (i.e., hanging the cylinder on one end of a chain), attaching the clock to a wall where there is enough distance for the cylinder to slowly ‘fall’, setting the time, adding energy (i.e., pulling the chain so the cylinder is at maximum height), and setting the pendulum swinging.

The chain is long enough for eight days of running. However, for the clock to be visible from outside my kitchen I had to place it over a shelf, and running time is limited to 2.5 days before energy has to be added.

The swinging pendulum provides the reference beat for the running of the clock. The cycle time of a pendulum swing is proportional to the square root of the distance of the center of mass from the pivot point. There is an adjustment ring for fine-tuning the swing time (just visible below the circular gold disc of the pendulum).
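As a rough sketch of what that proportionality implies for fine-tuning, assume an ideal simple pendulum swinging through small angles (an idealisation; the real pendulum is a rigid body, and $L$ and $g$ below are symbols introduced here, for the pivot-to-center-of-mass distance and gravitational acceleration):

```latex
T = 2\pi\sqrt{\frac{L}{g}}
\qquad\Longrightarrow\qquad
\frac{\Delta T}{T} \approx \frac{1}{2}\,\frac{\Delta L}{L}
```

Under that assumption, correcting a clock that runs one second per hour slow (a period too long by 1 part in 3600) would need the effective length shortened by about 2 parts in 3600, roughly 0.06%.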

I used my knowledge of physics to wind the center of mass closer to the pivot to reduce the swing time slightly, overlooking the fact that the thread on the adjustment ring moved a smaller bar running through its center (which moved in the opposite direction when I screwed the ring towards the pivot). Physics+mechanical knowledge got it right on the next iteration.

I have had the clock running 1 second per hour too slow, and 1 second per hour too fast. Current thinking is that the pendulum is being slowed slightly when the cylinder passes on its slow fall (by increased air resistance). Yes dear reader, I have not been resetting the initial conditions before making a calibration run.

What else remains to learn, before summer heat has to be adjusted for?

While the clock face and hands may be great for attracting buyers, it has serious usability issues when it comes to telling the time. It is difficult to tell the time without paying more attention than normal; without being within a few feet of the clock it is not possible to tell the time by just glancing at it. The see-through nature of the face, the black-on-black of the end of the hour/minute hands, and the extension of the minute hand in the opposite direction all combine to really confuse the viewer.

A wire cutter solved the minute hand extension issue, and yellow fluorescent paint solved the black-on-black issue. Ravensburg clock with improved user interface, framed by faded paint of its predecessor below:

There is a discreet ting at the end of every hour. This could be slightly louder, and I plan to add some weight to the bell hammer. Had the bell been attached slightly off center, fine volume adjustment would have been possible.

Limiting the number of open sockets in a tokio-based TCP listener

Andy Balaam from Andy Balaam's Blog

I learned quite a bit today about how to think about concurrency in Rust. I was trying to use a Semaphore to limit how many open sockets my TCP listener allowed, and I had real trouble making it work. It either didn’t actually work, allowing any number of clients to connect, or the compiler told me I couldn’t do what I wanted to do, because the lifetime of my Semaphore was not 'static. Here’s the journey I took towards working code that I think is correct (feedback welcome).

Motivation

In the tokio tutorial there is a short section entitled “Backpressure and bounded channels” (partway down the Channels page). It contains this statement:

…take care to ensure total amount of concurrency is bounded. For example, when writing a TCP accept loop, ensure that the total number of open sockets is bounded.

Obviously, when I started work on a TCP accept loop, I wanted to follow this advice.

Like many things in my journey with Rust, it was harder than I expected, and eventually enlightening.

The code

Here is a short Rust program that listens on a TCP port and accepts incoming connections.

Cargo.toml:

    [package]
    name = "tcp-listener-example"
    version = "1.0.0"
    edition = "2018"
    include = ["src/"]

    [dependencies]
    tokio = { version = ">=1.0.1", features = ["full"] }

src/main.rs:

    use tokio::io::AsyncReadExt;
    use tokio::net::TcpListener;

    #[tokio::main]
    async fn main() {
        let listener = TcpListener::bind("0.0.0.0:8080").await.unwrap();
        loop {
            let (mut tcp_stream, _) = listener.accept().await.unwrap();
            tokio::spawn(async move {
                let mut buf: [u8; 1024] = [0; 1024];
                loop {
                    let n = tcp_stream.read(&mut buf).await.unwrap();
                    if n == 0 {
                        return;
                    }
                    print!("{}", String::from_utf8_lossy(&buf[0..n]));
                }
            });
        }
    }

This program listens on port 8080, and every time a client connects, it spawns an asynchronous task to deal with it.

If I run it with:

cargo run

It starts, and I can connect to it from multiple other processes like this:

telnet 0.0.0.0 8080

Anything I type into the telnet terminal window gets printed out in the terminal where I ran cargo run. The program works: it listens on TCP port 8080 and prints out all the messages it receives.

So what’s the problem?

The problem is that this program can be overwhelmed: if lots of processes connect to it, it will accept all the connections, and eventually run out of sockets. This might prevent other things working right on the computer, or it might crash our program, or something else. We need some kind of sensible limit, as the tokio tutorial mentions.

So how do we limit the number of people allowed to connect at the same time?

Just use a semaphore, dummy

A semaphore does exactly what we need here – it keeps a count of how many people are doing something, and prevents that number getting too big. So all we need to do is restrict the number of clients that we allow to connect using a semaphore.

Here was my first attempt:

    use tokio::io::AsyncReadExt;
    use tokio::net::TcpListener;
    use tokio::sync::Semaphore;

    #[tokio::main]
    async fn main() {
        let listener = TcpListener::bind("0.0.0.0:8080").await.unwrap();
        let sem = Semaphore::new(2);

        loop {
            let (mut tcp_stream, _) = listener.accept().await.unwrap();
            // Don't copy this code: it doesn't work
            let aq = sem.try_acquire();
            if let Ok(_guard) = aq {
                tokio::spawn(async move {
                    let mut buf: [u8; 1024] = [0; 1024];
                    loop {
                        let n = tcp_stream.read(&mut buf).await.unwrap();
                        if n == 0 {
                            return;
                        }
                        print!("{}", String::from_utf8_lossy(&buf[0..n]));
                    }
                });
            } else {
                println!("Rejecting client: too many open sockets");
            }
        }
    }

This compiles fine, but it doesn’t do anything! Even though we called Semaphore::new with an argument of 2, intending to allow only 2 clients to connect, in fact I can still connect more times than that. It looks like our code changes had no effect at all.

What we were hoping to happen was that every time a client connected, we created _guard, which is a SemaphoreGuard, that occupies one of the slots in the semaphore. We were expecting that guard to live until the client disconnects, at which point the slot will be released.

Why doesn’t it work? It’s easy to understand when you think about what tokio::spawn does. It creates a task and asks for it to be executed in the future, but it doesn’t actually run it. So tokio::spawn returns immediately, and _guard is dropped, before the code that handles the request is executed. So, obviously, our change doesn’t actually restrict how many requests are being handled because the semaphore slot is freed up before the request is processed.
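The same scoping effect can be reproduced without tokio. The following is a minimal sketch using standard-library threads in place of tasks; `Guard` and `slots_in_use_while_task_runs` are hypothetical names invented for this illustration (they are not tokio's API), and the guard simply bumps a shared counter while it is alive.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{mpsc, Arc};
use std::thread;

/// Hypothetical stand-in for a semaphore guard: occupies a slot until dropped.
struct Guard {
    in_use: Arc<AtomicUsize>,
}

impl Guard {
    fn acquire(in_use: &Arc<AtomicUsize>) -> Guard {
        in_use.fetch_add(1, Ordering::SeqCst);
        Guard { in_use: Arc::clone(in_use) }
    }
}

impl Drop for Guard {
    fn drop(&mut self) {
        // Free the slot when the guard goes out of scope.
        self.in_use.fetch_sub(1, Ordering::SeqCst);
    }
}

/// Returns how many slots are occupied while the spawned work is still running.
fn slots_in_use_while_task_runs(move_guard_into_task: bool) -> usize {
    let in_use = Arc::new(AtomicUsize::new(0));
    // The spawned thread blocks on this channel, so it is guaranteed to be
    // unfinished when we inspect the counter below.
    let (finish, wait_for_finish) = mpsc::channel::<()>();

    let handle = {
        let guard = Guard::acquire(&in_use);
        if move_guard_into_task {
            thread::spawn(move || {
                let _held = guard; // lives until the work ends
                let _ = wait_for_finish.recv();
            })
        } else {
            thread::spawn(move || {
                let _ = wait_for_finish.recv();
            })
            // `guard` was not moved, so it is dropped at the end of this block,
            // while the spawned work has not finished.
        }
    };

    let observed = in_use.load(Ordering::SeqCst);
    finish.send(()).unwrap();
    handle.join().unwrap();
    observed
}

fn main() {
    println!("guard left behind: {}", slots_in_use_while_task_runs(false));
    println!("guard moved in:    {}", slots_in_use_while_task_runs(true));
}
```

When the guard is left in the enclosing scope, the slot is already free while the work is still in progress; only when the guard is moved into the spawned work does the slot stay occupied.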

Just hold the guard for longer, dummy

So, let’s hold on to the SemaphoreGuard for longer:

    use tokio::io::AsyncReadExt;
    use tokio::net::TcpListener;
    use tokio::sync::Semaphore;

    #[tokio::main]
    async fn main() {
        let listener = TcpListener::bind("0.0.0.0:8080").await.unwrap();
        let sem = Semaphore::new(2);

        loop {
            let (mut tcp_stream, _) = listener.accept().await.unwrap();
            let aq = sem.try_acquire();
            if let Ok(guard) = aq {
                tokio::spawn(async move {
                    let mut buf: [u8; 1024] = [0; 1024];
                    loop {
                        let n = tcp_stream.read(&mut buf).await.unwrap();
                        if n == 0 {
                            drop(guard);
                            return;
                        }
                        print!("{}", String::from_utf8_lossy(&buf[0..n]));
                    }
                });
            } else {
                println!("Rejecting client: too many open sockets");
            }
        }
    }

The idea is to pass the SemaphoreGuard object into the code that actually deals with the client request. The way I’ve attempted that is by referring to guard somewhere within the async move closure. What I’ve actually done is tell it to drop guard when we are finished with the request, but actually any mention of that variable within the closure would have been enough to tell the compiler we want to move it in, and only drop it when we are done.

It all sounds reasonable, but actually this code doesn’t compile. Here’s the error I get:

    error[E0597]: `sem` does not live long enough
      --> src/main.rs:12:18
       |
    12 |         let aq = sem.try_acquire();
       |                  ^^^--------------
       |                  |
       |                  borrowed value does not live long enough
       |                  argument requires that `sem` is borrowed for `'static`
    ...
    29 | }
       | - `sem` dropped here while still borrowed

What the compiler is saying is that our SemaphoreGuard is referring to sem (the Semaphore object), but that the guard might live longer than the semaphore.

Why? Surely sem is held within a scope that includes the whole of the client-handling code, so it should live long enough?

No. Actually, the async move closure that we are passing to tokio::spawn is being added to a list of tasks to run in the future, so it could live much longer. The fact that we are inside an infinite loop confused me further here, but the principle still remains: whenever we make a closure like this and pass something into it, the closure must own it, or if we are borrowing it, it must live forever (which is what a 'static lifetime means).

The code above passes ownership of guard to the closure, but guard itself is referring to (borrowing) sem. This is why the compiler says that “sem is borrowed for 'static“.

Wrong things I tried

Because I didn’t understand what I was doing, I tried various other things like making sem an Arc, making guard an Arc, creating guard inside the closure, and even trying to make sem actually have 'static storage by making it a constant. (That last one didn’t work because only very simple types like numbers and strings can be constants.)

Solution: Share the Semaphore in an Arc

After what felt like too much thrashing around, I found what I think is the right answer:

    use std::sync::Arc;
    use tokio::io::AsyncReadExt;
    use tokio::net::TcpListener;
    use tokio::sync::Semaphore;

    #[tokio::main]
    async fn main() {
        let listener = TcpListener::bind("0.0.0.0:8080").await.unwrap();
        let sem = Arc::new(Semaphore::new(2));

        loop {
            let (mut tcp_stream, _) = listener.accept().await.unwrap();
            let sem_clone = Arc::clone(&sem);
            tokio::spawn(async move {
                let aq = sem_clone.try_acquire();
                if let Ok(_guard) = aq {
                    let mut buf: [u8; 1024] = [0; 1024];
                    loop {
                        let n = tcp_stream.read(&mut buf).await.unwrap();
                        if n == 0 {
                            return;
                        }
                        print!("{}", String::from_utf8_lossy(&buf[0..n]));
                    }
                } else {
                    println!("Rejecting client: too many open sockets");
                }
            });
        }
    }

This code:

- Creates a Semaphore and stores it inside an Arc, which is a reference-counting pointer that can be shared between tasks. This means it will live as long as someone holds a reference to it.

- Clones the Arc so we have a copy that can be safely moved into the async move closure. We can’t move sem into the closure because it’s going to get used again the next time around the loop. We can move sem_clone into the closure because it’s not used anywhere else. sem and sem_clone both refer to the same Semaphore object, so they agree on the count of clients that are connected, but they are different Arc instances, so one can be moved into the closure.

- Only acquires the SemaphoreGuard once we’re inside the closure. This way we’re not doing something difficult like borrowing a reference to something that lives outside the closure. Instead, we’re borrowing a reference via sem_clone, which is owned by the closure which we are inside, so we know it will live long enough.

It actually works! After two clients are connected, listener.accept actually opens a socket to any new client, but because we return almost immediately from the closure, we only hold it open very briefly before dropping it. This seemed preferable to refusing to open it at all, which I thought would probably leave clients hanging, waiting for a connection that might never come.
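The ownership pattern itself does not depend on tokio. Here is a minimal sketch of the same shape using only the standard library, with threads standing in for tasks: `Limiter`, `Permit` and `count_accepted` are hypothetical names made up for this illustration (not tokio types), and the `Barrier` is there only to make every simulated client hold its slot until all of them have tried to acquire one.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, Barrier};
use std::thread;

/// Hypothetical minimal counting semaphore.
struct Limiter {
    permits: AtomicUsize,
}

/// Borrows the limiter, like the guard in the post borrows the semaphore.
struct Permit<'a> {
    limiter: &'a Limiter,
}

impl Limiter {
    fn new(permits: usize) -> Self {
        Limiter { permits: AtomicUsize::new(permits) }
    }

    /// Takes a slot if one is free; never blocks.
    fn try_acquire(&self) -> Option<Permit<'_>> {
        self.permits
            .fetch_update(Ordering::SeqCst, Ordering::SeqCst, |n| n.checked_sub(1))
            .ok()
            .map(|_| Permit { limiter: self })
    }
}

impl Drop for Permit<'_> {
    fn drop(&mut self) {
        // Give the slot back when the permit goes out of scope.
        self.limiter.permits.fetch_add(1, Ordering::SeqCst);
    }
}

/// Simulates `clients` simultaneous connections and counts how many got a slot.
fn count_accepted(limit: usize, clients: usize) -> usize {
    let limiter = Arc::new(Limiter::new(limit));
    let barrier = Arc::new(Barrier::new(clients));

    let handles: Vec<_> = (0..clients)
        .map(|_| {
            // Each thread owns its own Arc, so the borrow inside is long enough.
            let limiter = Arc::clone(&limiter);
            let barrier = Arc::clone(&barrier);
            thread::spawn(move || {
                let permit = limiter.try_acquire(); // acquired inside the closure
                barrier.wait(); // hold the slot until everyone has tried
                permit.is_some()
            })
        })
        .collect();

    handles
        .into_iter()
        .map(|h| h.join().unwrap())
        .filter(|&accepted| accepted)
        .count()
}

fn main() {
    println!("accepted {} of 5 clients", count_accepted(2, 5));
}
```

`std::thread::spawn` has the same `'static` requirement as `tokio::spawn`, which is why the clone-then-move of the `Arc` is needed here too.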

Lifetimes are cool, and tricky

Once again, I have learned a lot about what my code is really doing from the Rust compiler. I find this stuff really confusing, but hopefully by writing down my understanding in this post I have helped my current and future selves, and maybe even you, be clearer about how to share a semaphore between multiple asynchronous tasks.

It’s really fun and empowering to write code that I am reasonably confident is correct, and also works. The sense that “the compiler has my back” is strong, and I like it.

Recommendation against the use of WhatsApp in your company

Andy Balaam from Andy Balaam's Blog

Here is the email I just sent to the organisation I volunteer for. Feel free to adapt and use in your context.

Dear [organisation leaders],

Much of the tech industry (e.g. [1]) is warning against the use of WhatsApp due to its policy of collecting and sharing user information with third parties and the poor track record of its parent company (Facebook) on ethical issues (see examples [2] and [3], and many more).

The situation was made considerably worse with a recent change to the WhatsApp terms and conditions [4].

So, as your IT person I recommend not using WhatsApp for our work.

We already have an alternative available, and I would be really happy to help anyone who needs help setting it up.

[Details here of the alternative we use (Zulip) and how to use it. The simplest alternative to recommend is Signal.]

Thanks, Andy

[1] What Facebook and WhatsApp’s Data Sharing Plans Really Mean for User Privacy

[2] Facebook experimented with modifying people’s moods

[3] Facebook paid teens for total access to their phone activity

[4] If you’re a WhatsApp user, you’ll have to share your personal data

Dear customer, the truth about IT

Allan Kelly from Allan Kelly Associates

10 years on I feel the need to repost this classic letter from the IT industry to our clients.

Audio version, read by Allan Kelly.

Dear customer,

I think it’s time we in the IT industry came clean about how we charge you, why our bills are sometimes a bit higher than you might expect, and why so many IT projects result in disappointment. The truth is that when we start an IT project, we don’t know how much time and effort it will take to complete. Consequently, we don’t know how much it will cost. This may not be a message you want to hear, particularly since you are absolutely certain you know what you want.

Herein lies another truth, which I’ll try to put as politely as I can. You are, after all, a customer, and, really, I shouldn’t offend you. You know the saying “The customer is always right”? The thing is, you don’t know what you want. You may know in general terms, but the devil is in the detail – and the more detail you try to give us beforehand, the more likely your desires are to change. Each time you give us more detail, you are offering more hostages to fortune.

Software engineering expert Capers Jones believes the things you want (‘requirements’, as we like to call them) change 2% per month on average – that’s close to 27% over a year once you compound changes. Personally, I’m surprised that number is so low.

Just to complicate matters, the world is uncertain. Things change, and companies go out of business. Remember Enron? Remember Lehman Brothers? Customer tastes change. Remember Cabbage Patch Kids? Fashion changes, governments change, and competitors do their best to make life hard. So, really, even if you do know absolutely what you want when you first speak to us, it is unlikely that it will stay the same for very long.

I’m afraid to say that there are people in the IT industry who will take advantage of this situation. They will smile and agree with you when you tell them what you want, right up to the point when you sign. From then on, it’s a different story; they know that changes are inevitable, and they plan to make a healthy profit from change requests and late additions at your expense.

While I’m being honest, it is true we sometimes gold-plate things. You might not need a data warehouse for your online retailer on day one. Yes, some of our engineers like to do more than what is needed, and yes, we have a vested interest in getting things added so that we can charge you more.

It is also true that you quite legitimately think of features and functionality you would like after we’ve begun. You naturally assume something is ‘in’ when we assume it is ‘out’. And, in the spirit of openness, can you honestly say that you’ve never tried to put one over on us? (Let’s not even talk about bugs right now: it just complicates everything.)

Frankly, given all this, it is touching that you have so much faith in technology to deliver. But when IT does deliver, it delivers big. Look what it did for Bill Gates and Larry Page, or Amazon and FedEx. Isn’t it interesting that when the IT industry develops things for itself, we end up with multi-millionaires? When we develop for other people, they end up losing money.

How did we ever talk you into any of this? Well, we package this unsightly mess and try to sell it to you. To do this, we have to hide all this unpleasantness. We start with a ritual called ‘estimation’ – how much time we think the work will take. These ‘estimates’ are little better than guesses. Humans can’t estimate time. We’ve known this since at least the late ’70s, when Kahneman and Tversky described the ‘planning fallacy’ in 1979; Kahneman went on to win a Nobel Prize. Basically, humans consistently underestimate how long work will take and are overconfident in their estimates.

To make things worse, we have a bad habit we really should kick. Between estimating the work and doing the work, we usually change the team. The estimate may be made by the IT equivalent of Manchester United or the New York Yankees, but the team that actually does the work is more than likely a rag-tag bunch of coders, analysts and managers who’ve never met before.

Historical data – data about estimates, actuals, costs, etc – can help inform planning, but most companies don’t have their own data. For those that do have data, most of it is worse than useless. In fact, Capers Jones suggests that inaccurate historical data is a major cause of project failure. For example, software engineers rarely get paid overtime, so tracking systems often miss these extra hours. Indeed, some companies prohibit employees from logging more than their official hours in their systems.

So we make this guess (sorry, ‘estimate’) and double it – or we might even triple it. If the new number looks too high, we might reduce it. Once our engineers have finished massaging the number, we give it to the sales folk, who massage it some more. After all, we want you to say “yes” to the biggest sticker price we can get. That might sound awful, but remember: we could have guessed higher in the first place.

Please don’t shoot me: I’m only the messenger.

We don’t know which number is ‘right’, but to make it acceptable to you, we pretend it is certain and we take on the risk. We can only do this if the number is sufficiently padded (and, even then, we go wrong). If the risk pays off, we get a fat profit. If it doesn’t, we don’t get any profit and may take a loss. If it’s really bad, you don’t get anything and we end up in court or bust.

The alternative is that you take on the risk – and the mess – and do it yourself. Unfortunately, another sad truth is that in-house IT is generally even worse than that provided by specialists. For a software company development is a core competency – such companies live or die by their ability to deliver software, and if they are bad, they cease to trade. Evolution weeds out the poor performers. Corporate IT on the other hand rarely destroys a business – although it may damage profits. Indeed, Capers Jones’ research also suggests specialist providers are generally better than corporate IT departments.

Sales folk might be absent, but the whole estimation process is open to gaming from many other sources and for many other reasons. The bottom line: if you decide to take on the risk, you may actually increase risk.

I know this sounds like a no-win scenario. You could just sit on the fence and wait for Microsoft or Google to solve your problems with a packaged solution, but will your competitors stand still while you do? Will you still be running a business when Google produces a free version?

Beware snake oil salesmen selling off-the-shelf applications. Once people start talking about ‘customisation’ or ‘configuration’, you head down a slippery slope. Configuring a large SAP installation is not a matter of selecting Tools, Options and then ticking a box. Configuring large packages is a major software development activity, no matter what you have been told. The people who undertake the configuration might be called ‘consultants’, but they are really specialist software developers, programmers by another name.

There really isn’t a nice, simple solution to any of this. We can’t solve this problem for you. We need you, but you have to work with us. As the customer, you have to be prepared to work with us, the supplier, again and again in order to reduce the risk. Addressing risks in a timely and cost-effective manner involves business-level decisions and trade-offs. If you aren’t there to help, we can either make the decision for you (adding the risk that you disagree), or spend your time and money to address it.

You need to be prepared to accept and share the risk with us. If you aren’t prepared to take on any risk, we will charge you a lot for all the risk we take on. Sharing the risk has the effect of reducing the risk, because once the risk is shared you, the customer, are motivated to reduce risk. One of the major risks on IT projects is a lack of customer involvement. You can help with that just by staying involved.

Ultimately all risk is your risk: you are the customer, you are paying for the project one way or another. If it fails to deliver value, it is your business that will suffer. When you share risks, when you are involved closely, risks can be addressed immediately rather than being allowed to fester and grow.

Finally, you may have grand ambitions, but we need to work in small chunks. I know this may not sound very sexy, but software creation works best when small. Economies of scale don’t exist. In fact, we have diseconomies of scale, so we need to work in tiny pieces again, again and again. If you are prepared to accept these suggestions, then let’s press ‘reset’ on our relationship and talk some more.

Yours sincerely,

The IT Industry

Dear Customer was first published on this blog nearly 10 years ago; a polished version became famous in Agile Journal (now Agile Connection) a few months later and forms the prologue to Xanpan, 2015.


The post Dear customer, the truth about IT appeared first on Allan Kelly Associates.

Likelihood of a fault experience when using the Horizon IT system

Derek Jones from The Shape of Code

It looks like the UK Post Office’s Horizon IT system is going to have a significant impact on the prosecution of cases that revolve around the reliability of software systems, at least in the UK. I have discussed the evidence illustrating the fallacy of the belief that “most computer error is either immediately detectable or results from error in the data entered into the machine.” This post discusses what can be learned about the reliability of a program after a fault experience has occurred, or has been alleged to have occurred in the Horizon legal proceedings.

Sub-postmasters used the Horizon IT system to handle their accounts with the Post Office. In some cases money that sub-postmasters claimed to have transferred did not appear in the Post Office account. The sub-postmasters claimed this was caused by incorrect behavior of the Horizon system, the Post Office claimed it was due to false accounting and prosecuted or fired people and sometimes sued for the ‘missing’ money (which could be in the tens of thousands of pounds); some sub-postmasters received jail time. In 2019 a class action brought by 550 sub-postmasters was settled by the Post Office, and the presiding judge has passed a file to the Director of Public Prosecutions; the Post Office may be charged with instituting and pursuing malicious prosecutions. The courts are working their way through reviewing the cases of the sub-postmasters charged.

How did the Post Office lawyers calculate the likelihood that the missing money was the result of a ‘software bug’?

Horizon trial transcript, day 1, Mr De Garr Robinson acting for the Post Office: “Over the period 2000 to 2018 the Post Office has had on average 13,650 branches. That means that over that period it has had more than 3 million sets of monthly branch accounts. It is nearly 3.1 million but let’s call it 3 million and let’s ignore the fact for the first few years branch accounts were weekly. That doesn’t matter for the purposes of this analysis. Against that background let’s take a substantial bug like the Suspense Account bug which affected 16 branches and had a mean financial impact per branch of £1,000. The chances of that bug affecting any branch is tiny. It is 16 in 3 million, or 1 in 190,000-odd.”

That 3.1 million comes from the calculation: 19-year period times 12 months per year times 13,650 branches.

If we are told that 16 events occurred, and that there are 13,650 branches and 3.1 million transactions, then the likelihood of a particular transaction being involved in one of these events is 1 in 194,512.5. If all branches have the same number of transactions, the likelihood of a particular branch being involved in one of these 16 events is 1 in 853 (13650/16 -> 853); the branch likelihood will be proportional to the number of transactions it performs (ignoring correlation between transactions).
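The arithmetic behind these figures can be checked with a few lines of Rust. This is only a sketch using the numbers quoted above; the function names `monthly_accounts` and `one_in` are invented for this illustration.

```rust
/// Number of monthly branch accounts over the period (years x 12 x branches).
fn monthly_accounts(years: u32, branches: u32) -> u32 {
    years * 12 * branches
}

/// Expresses `events` out of `total` as the N in "1 in N".
fn one_in(total: u32, events: u32) -> f64 {
    f64::from(total) / f64::from(events)
}

fn main() {
    let branches = 13_650;
    let events = 16;
    let accounts = monthly_accounts(19, branches);

    println!("monthly branch accounts: {}", accounts);
    println!("per account: 1 in {}", one_in(accounts, events));
    println!("per branch:  1 in {}", one_in(branches, events));
}
```

The per-branch figure assumes, as the text does, that every branch has the same number of transactions.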

This analysis does not tell us anything about the likelihood that 16 events will occur, and it does not tell us anything about whether these events are the result of a coding mistake or fraud.

We don’t know how many of the known 16 events are due to mistakes in the code and how many are due to fraud. Let’s ask the question: What is the likelihood of one fault experience occurring in a software system that processes a total of 3.1 million transactions (the number of branches is not really relevant)?

The reply to this question is that it is not possible to calculate an answer, because all the required information is not specified.

A software system is likely to contain some number of coding mistakes, and given the appropriate input any of these mistakes may produce a fault experience. The information needed to calculate the likelihood of one fault experience occurring is:

- the number of coding mistakes present in the software system,

- for each coding mistake, the probability that an input drawn from the distribution of input values produced by users of the software will produce a fault experience.
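
If both pieces of information were known, the calculation itself would be short. The following is a minimal sketch under a strong simplifying assumption (every transaction independently triggers each mistake with a fixed probability); the function name and the probability values are hypothetical placeholders, not measurements of Horizon.

```python
import math

def prob_at_least_one_fault(per_mistake_probs, transactions):
    """Probability of one or more fault experiences over `transactions` inputs.

    Assumes each transaction independently triggers mistake i with
    probability per_mistake_probs[i] (a simplifying assumption).
    """
    # Probability that a single transaction triggers none of the mistakes.
    p_clean = math.prod(1.0 - p for p in per_mistake_probs)
    # Probability that every transaction is clean, subtracted from 1.
    return 1.0 - p_clean ** transactions

# Placeholder values for illustration only; NOT measurements of Horizon.
hypothetical_probs = [1e-7, 5e-8, 2e-9]
print(prob_at_least_one_fault(hypothetical_probs, 3_100_000))
```

The point of the sketch is that the answer is driven entirely by the list of per-mistake probabilities, which is exactly the information nobody has.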

Outside of research projects, I don’t know of anyone who has obtained the information needed to perform this calculation.

The Technical Appendix to Judgment (No.6) “Horizon Issues” states that there were 112 potential occurrences of the Dalmellington issue (paragraph 169), but does not list the number of transactions processed between these ‘issues’ (which would enable a likelihood to be estimated for that one coding mistake).

The analysis of the Post Office expert, Dr Worden, is incorrect in a complicated way (paragraphs 631 through 635). To ‘prove’ that the missing money was very unlikely to be the result of a ‘software bug’, Dr Worden makes a calculation that he claims is the likelihood of a particular branch experiencing a ‘bug’ (he makes the mistake of using the number of known events, not the number of unknown possible events). He overlooks the fact that while the likelihood of a particular branch experiencing an event may be small, the likelihood of any one of the branches experiencing an event is 13,650 times higher. Dr Worden creates complication by calculating the number of ‘bugs’ that would have to exist for there to be a 1 in 10 chance of a particular branch experiencing an event (his answer is 50,000), and then points out that 50,000 is such a large number it could not be true.

As an analogy, let’s consider the UK National Lottery, where the chance of winning the Thunderball jackpot is roughly 1 in 8-million per ticket purchased. Let’s say that I bought a ticket and won this week’s jackpot. Using Dr Worden’s argument, the lottery could claim that my chance of winning was so low (1 in 8-million) that I must have created a counterfeit ticket; they could even say that because I did not buy 0.8 million tickets, I did not have a reasonable chance of winning, i.e., a 1 in 10 chance. My chance of winning from one ticket is the same as everybody else who buys one ticket, i.e., 1 in 8-million. If millions of tickets are bought, it is very likely that one of them will win each week. If only, say, 13,650 tickets are bought each week, the likelihood of anybody winning in any week is very low, but eventually somebody will win (perhaps after many years).
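
The lottery numbers can be checked with a short calculation. This sketch assumes every ticket is an independent random pick with a 1 in 8-million chance of winning; the function name is mine.

```python
def prob_any_ticket_wins(tickets, p_win=1 / 8_000_000):
    """Chance that at least one of `tickets` independent tickets wins."""
    return 1.0 - (1.0 - p_win) ** tickets

# One ticket, a week's worth at 13,650 tickets, and two larger volumes.
for tickets in (1, 13_650, 800_000, 8_000_000):
    print(f"{tickets:>9,} tickets: {prob_any_ticket_wins(tickets):.6f}")
```

With 0.8 million tickets the chance of at least one winner comes out at roughly 1 in 10, while 13,650 tickets give a chance of under 0.2% in any one week.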

The difference between the likelihood of winning the Thunderball jackpot and the likelihood of a Horizon fault experience is that we have enough information to calculate one, but not the other.

The analysis by the defence team produced different numbers, i.e., did not conclude that there was not enough information to perform the calculation.

Is there any way that the information needed to calculate the likelihood of a fault experience occurring could be obtained?

In theory fuzz testing could be used. In practice this is probably completely impractical. Horizon is a data driven system, and so a copy of the database would need to be used, along with a copy of all the Horizon software. Where is the computer needed to run this software+database? Yes, use of the Post Office computer system would be needed, along with all the necessary passwords.

Perhaps if we wait long enough, a judge will require that one party make all the software+database+computer+passwords available to the other party.

My story, my why

AllanAdmin from Allan Kelly Associates

I thought I’d open 2021 with a personal story of how I got where I am today (no, I’m not in San Francisco, although that is the Golden Gate in the picture).

I started programming when I was 12 (ZX81 then BBC) and then led a very successful career into my 30s – including a spell in California. Increasingly I found the code was not the challenge, I could make the code do what I wanted. The problem was the way we were managed, or mismanaged, the things we were asked to do and the way we were organised.

So began my journey into “management”. Determined to be a better manager than those I had worked for I took myself back to school. During a year in business school I learned a lot of good stuff, I discovered “organisational learning” and I reconnected with my dyslexia.

“Agile” was just breaking at the time and in agile I saw the same ethos of learning I was getting so excited about. The reports of agile teams I read described the best aspects of the developments I had worked on. For me, managing software delivery and enhancing agile are the same thing.

My mission became to help my younger self: help technologists deliver successful products and enjoy satisfying work. Most of what I do falls under the “agile” banner but really it is about creating the processes and environments where people can learn, thrive and excel.

When people are getting satisfaction from their work delivering great products, businesses succeed and grow. And as software has come to underpin every digital initiative my work has expanded.

For my latest blog posts, giveaways and special offers on books and training subscribe to my newsletter – and as a thank you download my Project Myopia eBook for free.

The post My story, my why appeared first on Allan Kelly Associates.

Streaming video with Owncast on a free Oracle Cloud computer

Andy Balaam from Andy Balaam's Blog

I just streamed about 40 minutes of me playing Trials Fusion using Owncast. Owncast is a self-hosted alternative to streaming services like Twitch and YouTube live.

Normally, you would need to pay for a computer to self-host it on. Owncast suggest this will cost about $5/month.

But Oracle Cloud has an “Always Free” tier that includes a “Compute Instance” (a virtual machine running Linux) that is capable of running Owncast.

Here’s how I did it:

Register for Oracle Cloud

This was probably the worst bit.

I went to oraclecloud.com and clicked “Sign up for free cloud tier”. It didn’t work in Firefox(!) so I had to use Chromium.

I had to enter my name, address, email address, phone number and credit card details. The email was verified, the phone number was verified (with a text message), and the credit card was verified (with a real transaction), so there was no getting around any of it.

They promise that they won’t charge my card. I’ll let you know if I discover differently.

Create a Compute Instance

Once I was logged in to the Oracle “console” (web site), I clicked the burger menu in the top left, chose “Compute” and then “Instances” to create a new instance. I followed all the default settings (including using the default “image”, which meant my instance was running Oracle Linux, which I think is similar to Red Hat), and when I got to the ssh keys part, I supplied the public key of my existing SSH key pair. Read the docs there if you don’t have one of these.

Once that was done, and I had waited for the instance to be created and started, I was able to SSH in to my instance using a username of opc and the Public IP Address listed:

ssh opc@PUBLIC_IP

(Note: here and below, if I say “PUBLIC_IP”, I mean the IP address listed in the information about your compute instance. It should be a list of four numbers separated by dots.)

Allow connecting to the instance on different ports

Owncast listens for HTTP connections on port 8080, and RTMP streams on 1935, so I needed to do two things to make that work.

Modify the Security List to add Ingress Rules

- On the information about my instance, I clicked on the name of the Subnet (under Primary VNIC).

- In the subnet, I clicked the name of the Security List (“Default Security List for …”) in the Security Lists list.

- In the Security List I clicked Add Ingress Rules and entered:

Stateless: unchecked
Source Type: CIDR
Source CIDR: 0.0.0.0/0
IP Protocol: TCP
Source Port Range: (blank)
Destination Port Range: 8080
Description: (blank)

and then clicked Add Ingress Rules to create the rule.

- I then added another Ingress Rule that was identical, except Destination Port Range was 1935.

Allow ports 8080 and 1935 on the instance’s own firewall

It took me a long time to figure out, but it turns out the Oracle Linux running on the Compute Instance has its own firewall. Eventually, thanks to a blog post by meinside: When Oracle Cloud’s Ubuntu instance doesn’t accept connections to ports other than 22, and some Oracle docs on ways to secure resources, I found that I needed to SSH in to the machine (like I showed above) and run these commands:

sudo firewall-cmd --zone=public --permanent --add-port=8080/tcp
sudo firewall-cmd --zone=public --permanent --add-port=1935/tcp
sudo firewall-cmd --reload

Now I was able to connect to the services I ran on the machine on those ports.
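
One way to confirm from another machine that both the Security List and the instance firewall let traffic through is to try a TCP connection to each port. This is a minimal sketch using only Python's standard library; the function names are mine, and a port will only show as open once something (such as Owncast) is actually listening on it.

```python
import socket

def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name lookup failures.
        return False

def report(host, ports=(8080, 1935)):
    """Print the state of each port (defaults are the ports Owncast uses)."""
    for port in ports:
        state = "open" if port_is_open(host, port) else "closed or filtered"
        print(f"{host}:{port} is {state}")

# Example: report("PUBLIC_IP"), replacing PUBLIC_IP with the instance's address.
```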

Install Owncast

The Owncast install was incredibly easy. I just followed the instructions at Owncast Quickstart. I SSHd in to the instance as before, and ran:

curl -s https://owncast.online/install.sh | bash

and then edited the file owncast/config.yaml to have a custom stream key in it. You can do that by typing:

nano owncast/config.yaml

There is information about this file at: owncast.online/docs/configuration.

Run Owncast

I ran the service like this:

cd owncast
./owncast

In future, if I want to leave it running, I may run it inside screen, or even use systemd or similar.

Open the web site

I could now see the web site by typing this into my browser’s address bar:

http://PUBLIC_IP:8080

(Where PUBLIC_IP is the Public IP copied from the Instance info as before.)

Stream some video

Finally, in OBS’s Settings I chose the Stream section and entered:

Service: Custom...
Server: rtmp://PUBLIC_IP/live
Stream key: STREAM_KEY

Where “STREAM_KEY” means the stream key I added to config.yaml earlier.

Now, when I clicked “Start Streaming” in OBS, my stream appeared on the web site!

Costs and limits

Oracle stated during sign-up that I would not be charged unless I explicitly chose to use a different tier.

The Compute Instance is part of the “Always Free” tier, so in theory it should stay up and working.

However, if you use lots of resources (which streaming for a long time probably does), I would expect services to be throttled and/or stopped completely. I have no idea whether they will allow enough resources for regular streaming, or whether this is all a waste of time. We shall see.

Pinephone update

Andy Balaam from Andy Balaam's Blog

I got a Pinephone for Christmas!

Here is a quick summary of my experience with it. (Originally published on mastodon.)

Update on the pinephone as promised.

I love it, but I would definitely not recommend expecting to use it as your actual phone.

I have the Manjaro Phosh edition. Phosh is GNOME customised for mobile.

It turns on, you can unlock it, and you get a launcher. It has apps, and some of them work.

Firefox works really well. I can use it for Youtube and loads of other sites. I installed uBlock Origin, and it works.

Adding my Nextcloud config to Phosh seamlessly gave me Calendar, Contact and TODO list apps working, with my data in them.

The Maps app found me easily via GPS. I could bring up directions by entering a from and to, but it didn't seem to want to guide me via GPS.

Several apps don't fit properly on screen, and there doesn't seem to be a way to scroll or move the windows.

The camera technically works but the picture looks terrible (squashed, wibbly and blue-coloured).

Scrolling around on the launcher updates at about 5-10 fps, which is fine but would put many people off.

Many of the apps available to install in the Software app don't really work. I assume the list of apps is the standard for GNOME or Manjaro, so many are not adapted for phones.

I _love_ the fact that all the work that has been put into desktop Linux can be re-used on phones. Why wasn't it always this way?

It's great to be able to buy hardware that is specifically designed to run properly free software.

The Terminal app works nicely and presents a keyboard with extra keys that you need in a terminal.

The settings app works nicely.

My biggest frustration was not being able to find software in the Software app that worked nicely.

I was looking for a Youtube app that protected my privacy. On Android I use NewPipe Legacy. On desktop I use Freetube. I couldn't find Freetube in Software. I tried Minitube but it was unusable (window didn't fit).

I haven't tried installing software from the command line. Maybe I can find (or build) Freetube via a Manjaro repo?

Or maybe I should investigate NewPipe Legacy via anbox, although that seems to miss the point a little :-)