Chris Oldwood from The OldWood Thing

Naming is hard, as we know from the old adage about the two hardest problems in Computer Science, and naming in tests is no different. I’ve documented my own journey around how I structure tests in two previous posts: “Unit Testing Evolution Part II – Naming Conventions” and “Other Test Naming Conventions”. I also covered similar ground quite recently in “Overly Prescriptive Tests”, but that was more about the content of the tests themselves, whereas here I’m trying to focus more on the language aspects.

Describing the Example

Something which I’ve observed, both from reviewing Fizz Buzz submissions with tests [1] and from real tests, is that the leap from writing a test which describes a single example to generalising the language to describe the effective behaviour [2] is often missing. For example, imagine you’re writing a unit test for a calculator. If you literally encode your example as your test name you might write:

    [Test]
    public void two_plus_two_is_equal_to_four()

Given that you could accidentally implement it with multiplication and still make the test pass, you might add another scenario to be sure you don’t fall into that trap:

    [Test]
    public void three_plus_seven_is_equal_to_ten()

The problem with these test names is that they only tell you about the specific scenario covered by the test, not about the bigger picture. One potential refactoring might be to parameterise the test, thereby forcing you to generalise the name:

    [TestCase(2, 2, 4)]
    [TestCase(3, 7, 10)]
    public void adding_two_numbers_together_returns_their_sum(. . .)
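To make the parameterised version concrete, here is a minimal sketch using NUnit’s `[TestCase]` attribute. The `Calculator` class, its `Add` method and the parameter names are hypothetical stand-ins invented for illustration; the snippet above only shows the test’s name.

```csharp
using NUnit.Framework;

// Hypothetical production code, assumed purely for illustration.
public static class Calculator
{
    public static int Add(int lhs, int rhs) => lhs + rhs;
}

[TestFixture]
public class CalculatorTests
{
    // 2 + 2 alone would also pass for multiplication (2 * 2 == 4);
    // the second case, 3 + 7, rules that implementation out.
    [TestCase(2, 2, 4)]
    [TestCase(3, 7, 10)]
    public void adding_two_numbers_together_returns_their_sum(int lhs, int rhs, int expected)
    {
        Assert.That(Calculator.Add(lhs, rhs), Is.EqualTo(expected));
    }
}
```

The name now describes the behaviour, while the individual examples live in the attributes, so adding another case does not require inventing another example-specific name.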

One way this often shows up in FizzBuzz tests is with examples for the various rules, e.g.

    [Test]
    public void three_returns_the_word_fizz()

    [Test]
    public void five_returns_the_word_buzz()

The rules of a basic calculator are already known to pretty much everyone, but here, unless you know the rules of the game Fizz Buzz, you would not be able to derive them from these examples alone, and one very important role of tests is to document, nay specify, the behaviour of our code.

Describing the Behaviour

Hence to encode the rules you need to think more generally:

a_number_divisible_by_three_returns_the_word_fizz

There are a couple of issues here, namely that technically any number is divisible by three (just not wholly), and also that it won’t be true once we start bringing in the more advanced rules. It’s not easy trying to be precise and yet also somewhat vague at the same time, but we can try:

a_number_wholly_divisible_by_three_generally_returns_the_word_fizz

Once we bring in the “divisible by three and divisible by five” rule it becomes much harder to be precise in our test names, as we’d have to include the overriding rules too, which likely makes them harder to read and comprehend:

a_number_wholly_divisible_by_three_but_not_also_wholly_divisible_by_five_returns_the_word_fizz

You might just get away with it this time but it’s not really scalable, and test names, much like code comments, have a habit of getting out of sync with reality. Even when they break due to new functionality it’s easy to end up fixing the test and forgetting to check whether the “documentation” aspect still reflects the new behaviour.

Hence I personally prefer to use words in test names that suggest “broad strokes” when necessary and guide the reader (top to bottom) from the more general scenarios to the more specific. This, in my mind, is similar to putting the happy path cases before the various error handling ones.
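As a rough sketch of that top-to-bottom ordering, the fixture below puts the “generally” tests first and the overriding rule last. The `FizzBuzz.Convert` method is a hypothetical implementation assumed for illustration, as is the name of the final test; the example inputs deliberately avoid multiples of fifteen in the two general tests.

```csharp
using NUnit.Framework;

// Hypothetical implementation, assumed purely for illustration.
public static class FizzBuzz
{
    public static string Convert(int number)
    {
        if (number % 15 == 0) return "FizzBuzz";
        if (number % 3 == 0) return "Fizz";
        if (number % 5 == 0) return "Buzz";
        return number.ToString();
    }
}

[TestFixture]
public class FizzBuzzTests
{
    // Broad strokes first: "generally" hints that a later test refines the rule.
    [TestCase(3)]
    [TestCase(6)]
    [TestCase(9)]
    public void a_number_wholly_divisible_by_three_generally_returns_the_word_fizz(int number)
    {
        Assert.That(FizzBuzz.Convert(number), Is.EqualTo("Fizz"));
    }

    [TestCase(5)]
    [TestCase(10)]
    [TestCase(20)]
    public void a_number_wholly_divisible_by_five_generally_returns_the_word_buzz(int number)
    {
        Assert.That(FizzBuzz.Convert(number), Is.EqualTo("Buzz"));
    }

    // The more specific, overriding rule comes after the general ones.
    [TestCase(15)]
    [TestCase(30)]
    public void a_number_wholly_divisible_by_both_three_and_five_returns_the_word_fizzbuzz(int number)
    {
        Assert.That(FizzBuzz.Convert(number), Is.EqualTo("FizzBuzz"));
    }
}
```

Read in order, the names state the general rules and then the exception, without any single name having to spell out every rule that overrides it.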

Validating Collections

These examples might be a little too trivial but the impetus for this post came from similar scenarios where the test language talked about the outcome of the example itself rather than the behaviour of the logic in general. The knock-on effect of doing this, apart from making the intent of the example harder to comprehend in the future, was that it also became brittle as the specific scenario outcome was encoded in the test and any change in logic that might be orthogonal to it could break it unnecessarily. (As mentioned earlier, “Overly Prescriptive Tests” looks at brittle tests from a different angle.)

A common place where this shows up is when asserting behaviours around collections. For example, imagine writing tests for querying the seats available in a cinema where there are seats in different price bands. When testing the “seat query” method for an exhausted price band you might be inclined to write:

    [TestFixture]
    public class when_querying_for_seats_and_none_left_in_band
    {
        [Test]
        public void then_the_result_is_empty()
        {
            auditorium.Add("Posh Seats", new Seats[0]);

            var seats = auditorium.FindAvailableSeats();

            Assert.That(seats, Is.Empty);
        }
    }

Because the example is minimal, the result in this scenario will technically be empty. However, that is an artefact of the way the example is expressed and the test has been written. If I were to change the test set-up and add the following line, the test would break:

    auditorium.Add("Cheap Seats", new Seats[100]);

While the outcome of the example above might be “empty”, that is not the general behaviour of the logic under test and our test language should be changed to describe that:

    [Test]
    public void then_no_seats_in_that_band_are_returned()

Now we’re not making a statement about what else might or might not be in that result, only what our expectations are for seats in the band in question. Once we have fixed the test language we can address how we validate that in the example. Instead of looking at what is in the collection we should be looking at what isn’t there, as the test name tells us to expect that something should be absent, and the assert should reflect that language:

    Assert.That(seats.Where(s => s.Band == "Posh Seats"), Is.Empty);

Now I should only be able to break this test by changing the data or logic specific to the example; orthogonal behaviours should not break it by accident. (See “Manual Mutation Testing” for more on how you can test the quality of your tests.)
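Putting those pieces together, a self-contained sketch of the revised test might look like the following. The `Seat` and `Auditorium` types are hypothetical and much simplified; in particular, `Add` here takes a seat count rather than the array used in the snippets above, purely to keep the example short.

```csharp
using System.Collections.Generic;
using System.Linq;
using NUnit.Framework;

// Hypothetical types, assumed purely for illustration.
public class Seat
{
    public Seat(string band) { Band = band; }
    public string Band { get; }
}

public class Auditorium
{
    private readonly List<Seat> _available = new List<Seat>();

    // Registers `count` available seats in the given price band.
    public void Add(string band, int count)
    {
        _available.AddRange(Enumerable.Range(0, count).Select(_ => new Seat(band)));
    }

    public IReadOnlyList<Seat> FindAvailableSeats() => _available;
}

[TestFixture]
public class when_querying_for_seats_and_none_left_in_band
{
    [Test]
    public void then_no_seats_in_that_band_are_returned()
    {
        var auditorium = new Auditorium();
        auditorium.Add("Posh Seats", 0);
        // Orthogonal data: seats in another band must not break this test.
        auditorium.Add("Cheap Seats", 100);

        var seats = auditorium.FindAvailableSeats();

        // Assert only on the band in question, mirroring the test name.
        Assert.That(seats.Where(s => s.Band == "Posh Seats"), Is.Empty);
    }
}
```

With the original `Assert.That(seats, Is.Empty)` this set-up would fail because of the cheap seats, even though the behaviour being described has not changed.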

Invest in Tests

If you’ve ever worked on a codebase with brittle tests you’ll know how frustrating it can be when your feature mushrooms because you broke a bunch of badly written tests. If we’re lucky we see the failed assertion and if it’s not obvious then we can look back at the test name to see if the scenario rings any bells. If we’re unlucky we have to immediately reach for the debugger and likely add “refactor tests” to the yak stack.

If you “pay it forward” by taking the time to write good tests up front you’ll find it easier to sustain delivery in the future.

[1] A company I once worked for used Fizz Buzz in their early candidate screening process. Despite being overkill in practice (as was pointed out to candidates), a suite of tests was requested as part of the submission to help get a feel for what style they used. IMHO the tests said much more about candidates than the production code.

[2] Yes, “property based testing” takes this entire concept a step further so that it exercises the behaviour with multiple examples generated differently each time. That’s the destination; this post is about one possible journey.

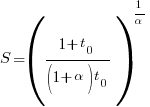

, where

, where  is the percentage of one person’s time spent communicating in a two-person team,

is the percentage of one person’s time spent communicating in a two-person team,  the number of developers and

the number of developers and  a constant greater than zero (I’m using Tausworthe’s notation).

a constant greater than zero (I’m using Tausworthe’s notation). .

. (i.e., everybody on the project has the same communications overhead), then

(i.e., everybody on the project has the same communications overhead), then  , which for small

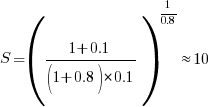

, which for small  . For example, if everybody on a team spends 10% of their time communicating with every other team member:

. For example, if everybody on a team spends 10% of their time communicating with every other team member:  .

. , then we have

, then we have  .

. , where

, where  is a constant found by fitting data from the two-person team (before any more people are added to the team).

is a constant found by fitting data from the two-person team (before any more people are added to the team). , and if

, and if  , then:

, then:  .

.