Tim Pizey from Tim Pizey

When your CI server is becoming too big to fail

This post was written when I was responsible for a heavily used CI server, for a company which is no longer trading, so the tenses may be mixed.

Once an organisation starts to use Jenkins, and starts to buy into the Continuous Integration methodology, very quickly the Continuous Integration server becomes indispensable.

The Problem

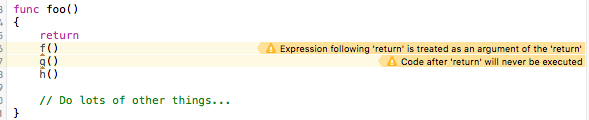

The success of Jenkins is based upon its plugin-based architecture. This has enabled Kohsuke Kawaguchi to keep tight control over the core whilst allowing others to contribute plugins. This has led to rapid growth of the community and a very low bar to contributing (there are currently over 1000 plugins).

Each plugin has the ability to bring your CI server to a halt. Whilst there is a Long Term Support version of Jenkins, the plugins, which supply almost all of the functionality, do not have any enforced gatekeeping.

Solution Elements

A completely resilient CI service is an expensive thing to achieve. The following elements must be applied bearing in mind the proportion of the risk of failure they mitigate.

Split its jobs onto multiple CI servers

Use of personal Jenkins installations is recommended, but there is still a requirement for a single, central server.

Splitting onto multiple servers should be a last resort; splitting tasks out across slaves achieves many of the benefits without losing a single reporting point.

Split jobs out to SSH slaves

Our ssh slaves were misconfigured so that they installed the Jenkins package. The only use of the package is to ensure that the jenkins user is present, though tasks should not, ideally, be run as the jenkins user.

One disadvantage of using ssh slaves is that the ssh keys must be copied manually from the master server to the slaves.

Because jobs are initiated by the master on the slave, the master cannot be restarted during a job's execution (this is currently also true for JNLP slaves, but is not necessarily so).

The main disadvantage of ssh slaves is that by referencing real slaves they make the task of creating a staging server more complex, as a simple copy of the master would initiate jobs on the real slaves.

Split jobs out to JNLP slaves

Existing ssh slave jobs should be left unchanged until they can be replaced. Until then they remain a blocker on creating a staging CI server.

JNLP slaves are the recommended setup, which we used eventually for most jobs.

Minimise Shared Resources

In addition to sharing plugins, and hence sharing faulty plugins, another way in which jobs can adversely interact is by their use of shared resources (disk space, memory, CPUs) and shared services (databases, message queues, mail servers, web application servers, caches and indexes).

Most of these problems can be overcome by spinning up a virtual machine for each job, from scratch, provisioned by puppet via vagrant.

Run the LTS version on production CI servers

Move to LTS at the earliest opportunity.

There are two plugin feeds, one for bleeding edge, the other for LTS.

Strategies for Plugin upgrade

Hope and trust

Up until our recent problem I would have said that the Jenkins community is pretty high quality and that most plugins do not break your server. Your ability to predict which ones will break your installation is small, so brace yourself and be ready to fix and report any problems that arise. I have run three servers for five years and had not previously had a problem.

Upgrade plugins one at a time, restart server between each one.

This seems reasonable, but at a release rate of 4.3 plugin releases per day, seven days a week since 2011-02-21, even your subset of plugins is going to get updated quite frequently.
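As a rough sketch of the one-at-a-time approach, the loop below upgrades a plugin, restarts, checks the server, and stops at the first plugin that leaves it unhealthy. The function name and the three callables (`upgrade`, `restart`, `is_healthy`) are hypothetical placeholders for whatever mechanism you use to drive your own server; nothing here is a Jenkins API.

```python
def upgrade_one_at_a_time(plugins, upgrade, restart, is_healthy):
    """Upgrade plugins in turn, restarting and checking health after each.

    `upgrade`, `restart` and `is_healthy` are caller-supplied callables
    (hypothetical hooks, not part of Jenkins). Returns a tuple of
    (plugins upgraded cleanly, first plugin that broke the server or None).
    """
    upgraded = []
    for plugin in plugins:
        upgrade(plugin)
        restart()
        if not is_healthy():
            # Stop here so the culprit is unambiguous.
            return upgraded, plugin
        upgraded.append(plugin)
    return upgraded, None
```

Stopping at the first failure is the point of the strategy: with a single change between restarts there is only one candidate to roll back and report.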

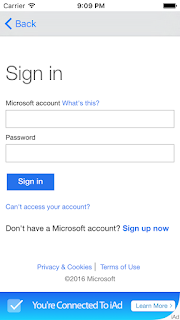

Use a staging CI server, if you can

If your CI server and its slaves are all set up using puppet, then you can clone the whole installation, including repositories and services, so that any publishing acts do not have any impact on the real world. Otherwise the staging server will send emails and publish artefacts which interfere with your live system. Whilst we are using ssh slaves, the staging server would either initiate jobs on the real slaves or the slaves too would need to be staged.

Use a partial staging CI server

Jobs which publish an artefact every time they are run cannot be re-run so are not suitable for running on a staging server.

You can prune your jobs down to those which are idempotent, i.e. those which do not publish and do not use ssh slaves, but the non-idempotent jobs cannot be re-run.
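The pruning rule can be written down as a simple filter. This is only an illustration: the `Job` record and its two flags are hypothetical, and in practice you would have to derive them from each job's configuration yourself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Job:
    """Hypothetical summary of a CI job, not a Jenkins type."""
    name: str
    publishes_artefacts: bool
    uses_ssh_slave: bool


def staging_safe(jobs):
    """Keep only jobs that neither publish nor run on ssh slaves."""
    return [
        job for job in jobs
        if not job.publishes_artefacts and not job.uses_ssh_slave
    ]
```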

Control and monitor the addition of plugins

Users intending to install a plugin should ask on IRC, giving the plugin URL.

From the above it is clear that for a production CI server the addition of plugins is not risk or cost free.

Remove unused plugins, after consulting original installer

We still have a number of redundant plugins installed.

Plugins build up over time.

Monitor the logs

Currently there is no monitoring of the Jenkins log.

A log monitor which detects Java exceptions might be used.
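A minimal sketch of such a monitor, assuming it is enough to spot fully qualified Java class names ending in `Exception` or `Error`. The pattern and the function name are my own illustration; a real monitor would also need to tail the log file and raise an alert.

```python
import re

# Matches fully qualified Java class names ending in Exception or Error,
# e.g. "java.lang.NullPointerException" or "java.lang.OutOfMemoryError".
EXCEPTION_PATTERN = re.compile(
    r"\b(?:[a-z_][\w$]*\.)+[\w$]*(?:Exception|Error)\b"
)


def find_exceptions(lines):
    """Return (line_number, class_name) for each line naming a Java exception."""
    hits = []
    for number, line in enumerate(lines, start=1):
        match = EXCEPTION_PATTERN.search(line)
        if match:
            hits.append((number, match.group(0)))
    return hits
```

It could be run over the log with something like `find_exceptions(open(path))`, where `path` is wherever your installation writes the Jenkins log.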

Backup the whole machine

Whilst the machine is backed up, a fire drill is needed to prove that a known state can be returned to.

Once a month restore from backup to a clean machine.

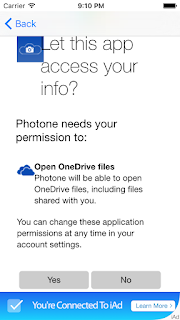

Store the configuration in Git

The configuration of Jenkins has been stored in Git, and restored from it.

This process is only one element of recreating a server. Once a month restore from git to a clean machine.
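As a sketch of deciding what to commit, the filter below keeps the top-level `*.xml` files and each job's `jobs/<name>/config.xml`, and leaves out build records, workspaces and plugin binaries. It assumes the usual flat layout of the Jenkins home directory with paths given relative to it; the function names are hypothetical, and nested job folders are not handled.

```python
from pathlib import PurePosixPath


def is_config_file(relative_path):
    """True for top-level *.xml files and for jobs/<name>/config.xml.

    Assumes a flat Jenkins home layout; paths are relative to that directory.
    """
    parts = PurePosixPath(relative_path).parts
    if len(parts) == 1:
        return parts[0].endswith(".xml")
    return len(parts) == 3 and parts[0] == "jobs" and parts[2] == "config.xml"


def select_config_files(relative_paths):
    """Return the sorted subset of paths worth keeping under version control."""
    return sorted(path for path in relative_paths if is_config_file(path))
```

Keeping build history out of the repository keeps it small, which matters if the monthly restore to a clean machine is to stay quick.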