Paul Grenyer from Paul Grenyer

Join us for the process sessions at nor(DEV):con 2019 on Friday 22nd and Saturday 23rd of February in Norwich!

Get your Early Bird tickets here before they finish on Friday!

# Evolution from #NoProjects to Continuous Digital

Allan Kelly

Once upon a time there was IT, and IT departments had projects. Projects were always a bad fit for software development but somehow we made them work. As IT became Agile the damage caused by the project model became obvious and #NoProjects emerged to help teams go beyond projects.

Today growth businesses are digital. Technology is the business and the business is technology. Projects end but do you want your business to end? Or do you want it to grow? Growing a digital business means growing software technology.

In this presentation Allan Kelly will look at how #NoProjects came about, how it evolved into Continuous Digital and why it is the future of management.

Miscellaneous Process Tips

Jon Jagger

This session will explore three important process laws.

1. The New Law.

Why does nothing new ever work?

2. Le Chatelier’s Principle.

How do systems change? How do they stay the same?

3. The Composition Fallacy.

“No difference plus no difference equals no difference” is a fallacy. Why?

Jugaad: Bringing Troubled Projects Back On Track

Giovanni Asproni

What do you do when a project is not going well—e.g., the client is upset, the team demoralized, the quality of the product is low, the project is late—to bring it back under control and make the client and the team happy again?

How do you do that in highly politically charged environments?

In this talk I’ll answer the questions above and more by sharing my experience of doing that in several projects of various sizes (from small to quite big) using some jugaad, a Hindi word that roughly means thinking in a frugal way and being flexible. That, in turn, requires the ability to adapt quickly and intelligently to often unforeseen situations and uncertain circumstances.

I’ll describe, among other things, how to:

- Work in highly politically charged environments

- Deal with difficult (and powerful) people and speak truth to them

- Help the teams to improve their morale and motivation

- Make progress with limited resources

- Use different leadership styles (including command and control)

- Make your client happier

- Deal with serious mistakes

Bug-First Development – Agile Software Development For User Story Prospecting

Adrian Pickering

The idea behind bug-first or bug-driven development is devilishly simple: Everything is a bug until it isn’t.

As far as a user is concerned, there is essentially no difference between a bug, a feature that hasn’t been delivered and one that is otherwise unusable, say through substandard user interface or user experience. Bug-driven development essentially asks the user what operation they want to do next that they currently can’t undertake. The benefit this brings is laser-focused story discovery and prioritisation.

Working remote vs Working colocated

Paul Boocock

We often talk about waterfall, scrum, agile and many other processes but these are often considered from a colocated perspective.

As demand for remote working continues to increase, we will discuss whether our usual processes work in a remote environment and what changes or considerations we need to make to support remote workers.

One Team, Two Teams, Many Teams: Scaling Up Done Right

Giovanni Asproni

Scaling up software projects is one of the trends of the moment—many companies, big and small, try to do that to increase the speed of delivery of their projects.

However, scaling up can be quite difficult (even going only from one to two teams), especially when it focuses on the wrong aspects. Most companies give too much weight to formal structures and processes (e.g., mandating the use of SAFe, LeSS or other frameworks), and not enough weight to other aspects that would give a bigger bang for the buck, e.g., removing friction, improving communication channels, setting clear goals, and delegating responsibility and accountability.

In this session I’ll share my experience in successfully helping companies to do the right thing in projects ranging from two to about eighty teams, and I’ll offer some tools that you will be able to use right away in your projects.

The session, among other things, includes:

- A description of what needs to be done right before scaling up

- Strategies on how to decide when to add new people to a team and new teams to a project

- Things to consider when deciding the structure of the teams (e.g., feature vs component teams), and its relationship with the shape of the system

- How to use simple rules to allow teams to collaborate productively

- An explanation of why each project has an upper bound in its ability to scale, and what to do about it

Reengineering a Library

Burkhard Kloss

Over the last few years, I’ve been consulting on reengineering a quant library. As is often the case, the library had originally accreted, rather than been designed; eventually, it had turned into a ball of mud, and maintenance was becoming increasingly problematic. We decided to rewrite the library from scratch, using best practices as we understood them, and eventually turned it into a piece of code we can be proud of – and maintain and extend without too much pain.

This talk is a personal retrospective on the techniques and processes we applied; what worked, what did not, and why.

Get your tickets now: http://nordevcon-2019.eventbrite.com

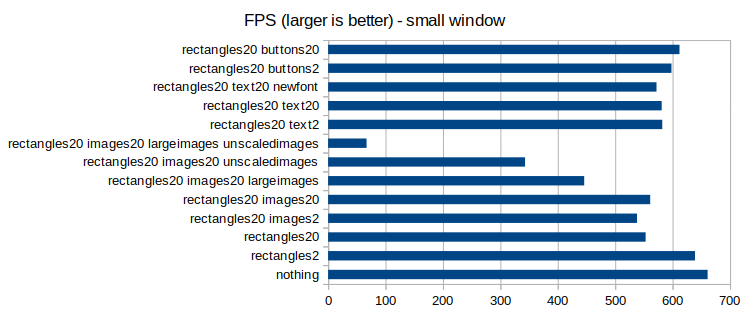

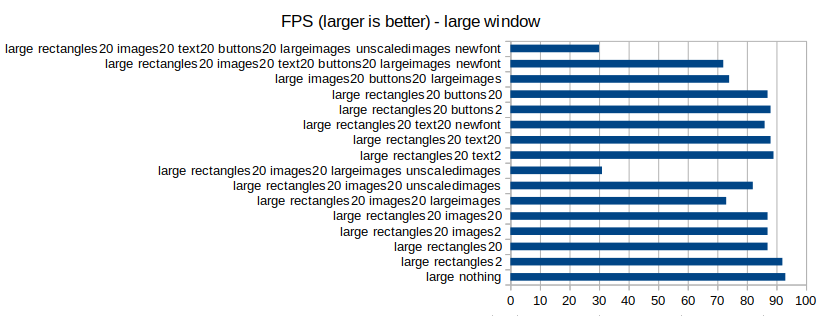
