Frances Buontempo from BuontempoConsulting

CppOnSea 2019

Phil Nash organised a new conference, CppOnSea, this year. I was lucky enough to be accepted to speak, so I attended the two conference days, but not the workshops.

There were three tracks, along with a beginners track, run by Tristan Brindle, who organises the C++ London Uni, which is a great resource for people who want to learn C++. I'll do a brief write-up of the talks I attended.

The opening keynote was by Kate Gregory, called "Oh The Humanity!" She made us think about the words we use. For example, Foo and Bar trace back to military usage, hinting at people putting their lives on the line. Perhaps we need better names for our variables and functions. We are not fighting a war. What about one-letter variable names? 'k. Nuff said. What about errorMessage? If you call it helpMessage instead, how does that affect your thinking?

Kate also talked about trying to keep the code base friendly, to increase confidence.

I went to see Kevlin Henney next. I have no idea how to summarise this. He covered so many things. What does structured programming really mean? By looking back at various uses and abuses of goto, and by highlighting the structure in various code snippets, he emphasised that some styles make the structure and intent easier to see.

I saw Andreas Fertig next. Inspired by Matthew CompilerExplorer's

Godbolt, he has created

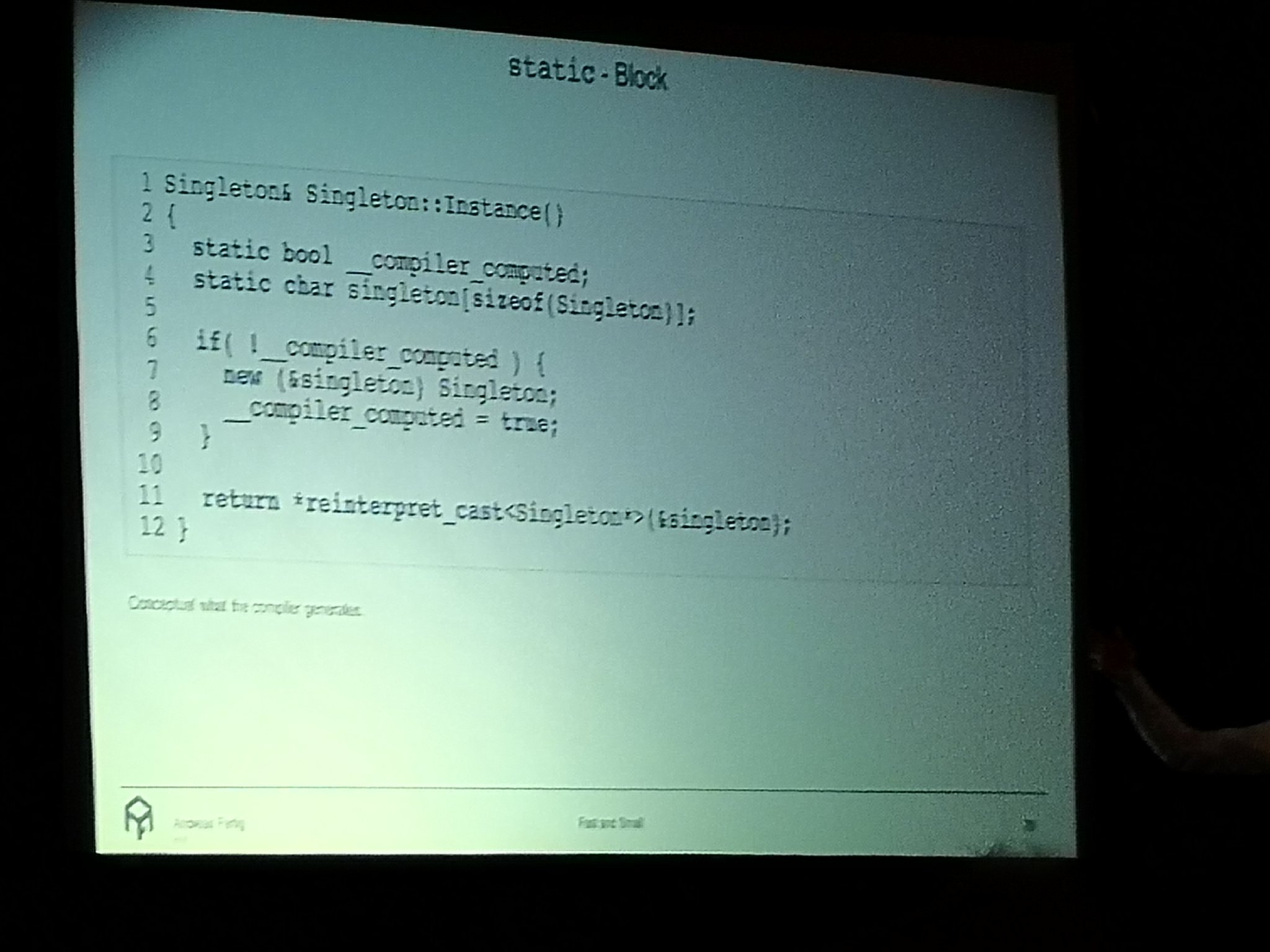

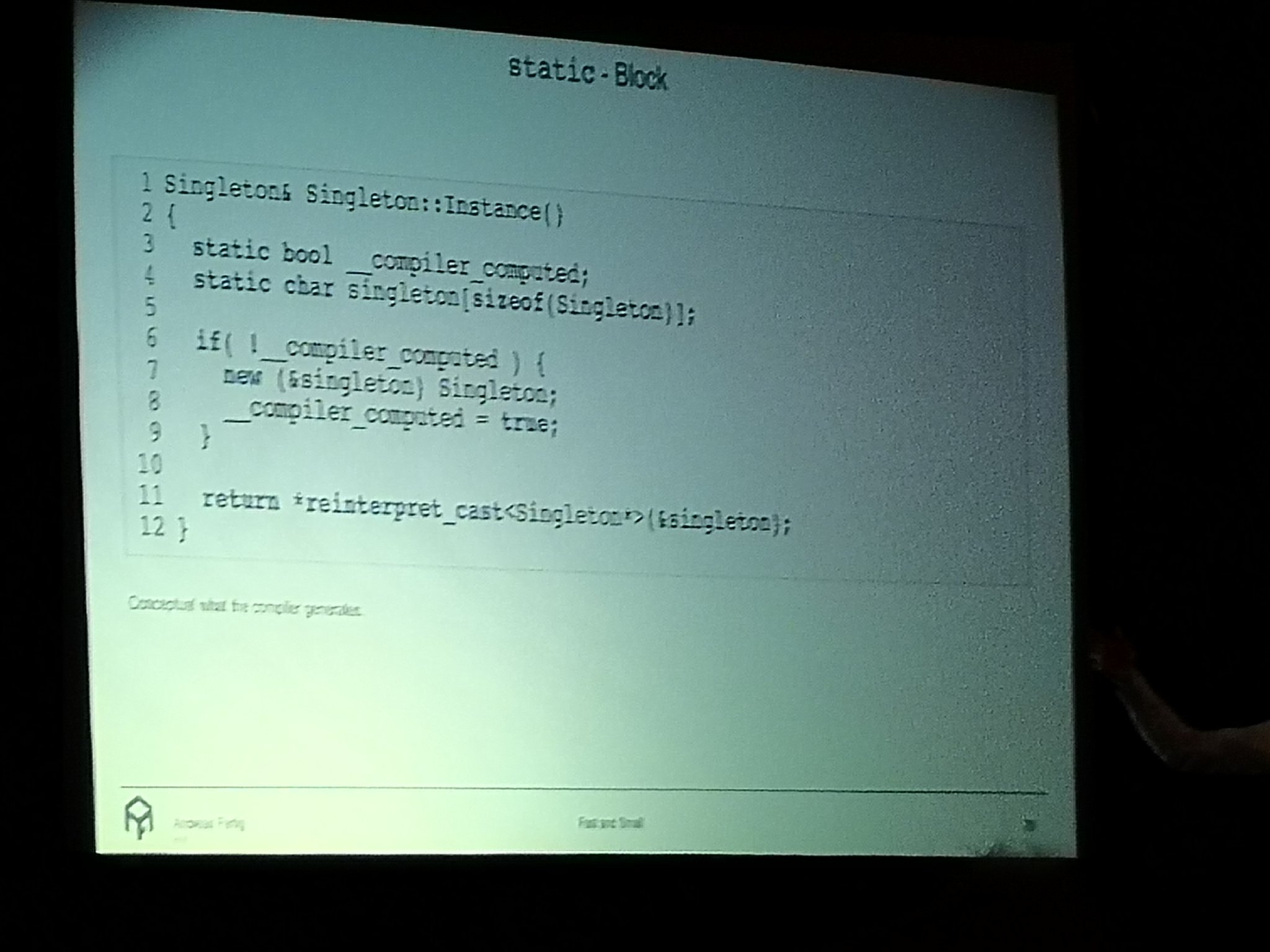

https://cppinsights.io/. Try it out. It unwraps some of the syntactic sugar, so you can see what the compiler has created for, say, a range based for loop. This can remind you where you might be creating temporaries or have references instead of copies or vice versa, without dropping down into assembly. Do you know the full horror of what might be going on inside a Singleton?
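To give a flavour of the sugar being unwrapped, here is a hand-written sketch of roughly what a range-based for loop over a `std::vector` means. It is not the tool's actual output: the names `range`, `first` and `last` are made up for illustration (a compiler uses its own internal names), and both function names are hypothetical.

```cpp
#include <cassert>
#include <vector>

// The sugared version: what you would normally write.
int sum_with_range_for(const std::vector<int>& values)
{
    int total{0};
    for (int value : values)   // 'int value' copies each element
    {
        total += value;
    }
    return total;
}

// Roughly what the loop above means once the sugar is removed.
int sum_desugared(const std::vector<int>& values)
{
    int total{0};
    {
        auto&& range = values;        // binds to the container, no copy
        auto first = range.begin();
        auto last = range.end();      // end() is evaluated once, up front
        for (; first != last; ++first)
        {
            int value = *first;       // the per-element copy is now explicit
            total += value;
        }
    }
    return total;
}
```

Seeing the copy written out as `int value = *first;` is the kind of reminder the tool gives you: change the loop variable to `const auto&` and that line becomes a reference binding instead.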

This led to an aside about statics and the double-checked locking pattern. His headline point was that the spirit of C++ is "you pay only for what you use", so be clear about what you are using. The point isn't that the new language features are expensive. They are often cheaper than old skool ways of doing things. Just try to be clear about what's going on under the hood. Play with the insights tool.

Next up, I saw Barney Dellar, talking about strong types in C++. His slides are probably clear enough by themselves, since they have thorough speaker notes. My main note to myself says "MIB: mishap investigation board", which amused me. He was talking about the trouble that can happen if you have doubles, or similar, for all your types, like mass and force:

double CalculateForce(double mass);

It's really easy to use the wrong units or pass things in the wrong order. By creating different types, known as strong types, you can get the compiler to stop you making mistakes. Using a template with tags, you can write clear code that avoids these mistakes.
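A minimal sketch of the template-with-tags idea follows. It is not taken from Barney's slides: `StrongDouble`, the tag structs and the extra `Acceleration` parameter are all assumptions made up to give the example something to mix up.

```cpp
#include <cassert>
#include <type_traits>

// A double wrapped in a class template. The Tag parameter stores nothing;
// it only makes StrongDouble<MassTag> and StrongDouble<ForceTag> distinct types.
template <typename Tag>
class StrongDouble
{
public:
    explicit constexpr StrongDouble(double value) : value_{value} {}
    constexpr double get() const { return value_; }

private:
    double value_;
};

struct MassTag {};
struct AccelerationTag {};
struct ForceTag {};

using Mass = StrongDouble<MassTag>;
using Acceleration = StrongDouble<AccelerationTag>;
using Force = StrongDouble<ForceTag>;

// The signature now says what it wants, and the compiler enforces it.
constexpr Force CalculateForce(Mass mass, Acceleration acceleration)
{
    return Force{mass.get() * acceleration.get()};
}

// A bare double no longer converts silently, and the types do not mix.
static_assert(!std::is_convertible<double, Mass>::value, "no implicit conversion from double");
static_assert(!std::is_same<Mass, Force>::value, "tags make distinct types");
```

With this in place, `CalculateForce(Mass{2.0}, Acceleration{9.81})` compiles, while `CalculateForce(Acceleration{9.81}, Mass{2.0})` and `CalculateForce(2.0, 9.81)` are both rejected at compile time.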

Next up was a plenary talk by Patricia Aas on Deconstructing Privilege. She's given the talk before, so you'll be able to find the slides or previous versions on YouTube. Her take is that privilege is about things that haven't happened to you. Many people get defensive if you say they have been privileged, but this way of framing the issue gives a great perspective. Loads of people turned up and listened. Maybe surprising for a serious geek C++ conference, but the presence of https://www.includecpp.org/ ensured there were many like-minded people around. If you are privileged, listen and try to help. And be careful asking intrusive questions if you meet someone different to you.

After quite a heavy, but great, talk, I was "in charge" of the lightning talks. Eleven people got slots. More volunteered, but there wasn't time for everyone:

Simon Brand; C++ Catastrophes: A Poem.

Odin Holmes; volatile none of the things

Paul Williams; std::pmr

Heiko Frederik Bloch; the finer points of parameter packs

Barney Dellar; imposter syndrome or mob programming

Matt Godbolt; "words of power"

Kevlin Henney; list

Niels Dekker; noexcept considered harmful???

Patricia Aas; C++ is like JavaScript

Louise Brown; The Research Software Engineer - A Growing Career Path in Academia

Denis Yaroshevskiy; A good usecase for if constexpr

My heartfelt thanks to Jim from http://digital-medium.co.uk and Kevlin "obi wan kenobi" Henney for helping me switch between PowerPoint, PowerPoint in presenter mode and the PDFs, and getting them to show on the main screen and my laptop. Nobody knows what was happening with the screen on the stage for the speaker. If you ever attend a conference, do volunteer to give a lightning talk. Sorry to the people we didn't have time for.

Day one done. Day two begun. First up, for me, after missing my own talk pitch, was Nico Josuttis. Don't forget his leanpub C++17 book. It's still growing. He talked about a variety of C++17 features. The standout point for me was the mess you can get into with initialisation. He's using {} everywhere, near enough. Like

for (int i{0}; i<n; ++i)

{

}

Adding an equals can end up doing horrible things.
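The talk's exact examples aren't recorded here, so the two cases below are assumptions: well-known places where an equals sign changes what you get. The function names are made up for illustration.

```cpp
#include <cassert>
#include <initializer_list>
#include <type_traits>

// With an equals sign, a double quietly converts to int and loses its fraction.
int truncate_with_equals(double value)
{
    int result = value;       // compiles; 3.7 becomes 3
    // int narrowed{3.7};     // braces reject this as a narrowing conversion
    return result;
}

// With auto, adding an equals sign in front of the braces changes the deduced type.
bool auto_deduction_differs()
{
    auto braces{0};           // deduced as int (C++17 rules)
    auto equals = {0};        // deduced as std::initializer_list<int>

    static_assert(std::is_same<decltype(braces), int>::value,
                  "auto x{0} deduces int");
    static_assert(std::is_same<decltype(equals), std::initializer_list<int>>::value,
                  "auto x = {0} deduces std::initializer_list<int>");

    return braces == 0 && equals.size() == 1;
}
```

Neither case is exotic, which is rather the point: one extra character and you have a different value or a different type.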

Much as I wanted to go see Simon Brand, Vittorio Romeo and Hana Dusikova (with slide progression by Matt Godbolt) next, I had a talk to do myself. I managed to diffuse my way out of a paper bag, while reminding us why C's rand is terrible, how useful property based testing can be, using some very simple mathematics: adding up and multiplying. This was based on a chapter of my book, and you can download the source code from that page if you want, even if you don't buy the book. I used the SFML to draw the diffusing green blobs. Sorry for not putting up a list of resources near the end.

I attempted to go to Guy Davidson's Linear algebra talk next, but the room was packed and I was a bit late. I heard great things about this. In particular, how important it is to design a good interface if you are making libraries.

My final choice was Clare Macrae's "Quickly testing legacy code". This was my unexpected hidden gem of the conference. She talked about approval testing. This compares a generated file to a gold standard file and bolts straight into googletest or Catch. It's available for several other languages. It generates the file on your first run, allowing you to get almost anything under test, provided it writes out a file you can compare. That then means you can start writing unit tests, if you need to change the code a bit. Changing legacy code to get it under test, without a safety harness, is dangerous. This keeps you safer. Her world involves Qt and visualisations of chemical molecules. These can be saved as pngs, so she can check she hasn't broken anything. She showed how you can bolt in custom comparators, so it doesn't complain about different generated dates and tolerates RGB differences smaller than the human eye could notice. Her code samples from the talk are here. I've not seen this as a formal framework before. Her slides were really clear and she explained what she was up to step by step. Subsequently twitter has been talking about this a fair bit, including adding support for Python3.
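To make the idea concrete, here is a hand-rolled sketch of the approval-testing loop: record the output on the first run, compare against it afterwards, and allow a custom comparator. This is not the API of Clare's library. `verify`, `Comparator`, `same_ignoring_generated_lines` and the `Generated:` line prefix are all invented for the example, and a real framework would typically ask a human to approve the first output rather than accept it automatically.

```cpp
#include <cassert>
#include <filesystem>
#include <fstream>
#include <functional>
#include <iterator>
#include <sstream>
#include <string>

namespace fs = std::filesystem;

// Decides whether the approved text and the newly received text count as "the same".
using Comparator = std::function<bool(const std::string&, const std::string&)>;

inline std::string read_file(const fs::path& path)
{
    std::ifstream in{path, std::ios::binary};
    return std::string((std::istreambuf_iterator<char>(in)),
                       std::istreambuf_iterator<char>());
}

inline void write_file(const fs::path& path, const std::string& text)
{
    std::ofstream out{path, std::ios::binary};
    out << text;
}

// First run: no approved file exists, so the output is recorded as the gold standard.
// Later runs: the output is compared with the gold standard using 'same'.
inline bool verify(const fs::path& approved, const std::string& received,
                   const Comparator& same = std::equal_to<std::string>{})
{
    if (!fs::exists(approved))
    {
        write_file(approved, received);
        return true;
    }
    return same(read_file(approved), received);
}

// Helper for the custom comparator: drop lines that start with "Generated:".
inline std::string without_generated_lines(const std::string& text)
{
    std::istringstream in{text};
    std::string line;
    std::string kept;
    while (std::getline(in, line))
    {
        if (line.rfind("Generated:", 0) != 0)   // rfind at 0 acts as "starts with"
        {
            kept += line;
            kept += '\n';
        }
    }
    return kept;
}

// Custom comparator: a changed date stamp alone does not fail the test.
inline bool same_ignoring_generated_lines(const std::string& approved,
                                          const std::string& received)
{
    return without_generated_lines(approved) == without_generated_lines(received);
}
```

The same shape would work for images: swap the string comparison for one that loads two pngs and accepts small per-pixel differences.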

Matt "Compiler Explorer" Godbolt gave the closing keynote. Apparently, he is the first person Phil Nash has met whose first conference talk was a keynote. He also had some AV issues.

If you've not encountered the compiler explorer before, try it out. You can choose which compiler you want to point your C++ code at and see what it generates. You need a little knowledge of the "poetry" it generates. More lines doesn't mean slower code. His tl;dr message was that many people spread rumours about what's slow, for example virtual functions. Look and see what your compiler actually does, rather than stating things that were true years ago. Speculative de-virtualization is a thing. Your compiler might decide there is only really one likely virtual function you'll call, so it checks the address and then avoids the "overhead" it used to have years ago. He also demonstrated what happened to various bit counting algos: most got immediately squashed down to one instruction, no matter how clever they looked. How many times have you been asked to count bits at interview? Spin up Godbolt and explore.

This really shows you need to keep up to date with your knowledge. Something that was true ten years ago may no longer hold with new compiler versions. Measure, explore, think.
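If you want something to paste into Compiler Explorer, here are three ways to count set bits. They are common textbook versions, not necessarily the ones Matt showed, and the function names are made up. Whether any of them collapses to a single instruction depends on your compiler, its version, the optimisation level and the target flags, so look rather than assume.

```cpp
#include <bitset>
#include <cassert>
#include <cstdint>

// The obvious version: test the lowest bit, shift, repeat.
int count_bits_shifting(std::uint32_t value)
{
    int count{0};
    for (; value != 0; value >>= 1)
    {
        count += static_cast<int>(value & 1u);
    }
    return count;
}

// The "clever" interview answer: each iteration clears the lowest set bit,
// so the loop runs once per set bit.
int count_bits_clearing_lowest(std::uint32_t value)
{
    int count{0};
    while (value != 0)
    {
        value &= value - 1;
        ++count;
    }
    return count;
}

// Let the standard library do it.
int count_bits_library(std::uint32_t value)
{
    return static_cast<int>(std::bitset<32>{value}.count());
}
```

Try each with optimisation switched on and compare the output for a couple of compilers and targets.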

There was a lovely supportive atmosphere and a variety of speakers. People were brave enough to ask questions, and only a few people were showing off that they thought they knew something the speaker didn't.

I'll try to back fill links to slides as I get them. Thanks to Phil for arranging this.

Did I mention I wrote a book?