Allan Kelly from Allan Kelly Associates

To be an effective Product Owner – and that includes product managers and business analysts who are nominating work for teams to do – you need at least four things. You may well need more than these four but these are common across all teams and domains.

- 1. Skills and experience

There is more to being a Product Owner than simply writing user stories and prioritising a backlog. Yes, you need to know how to work with a development team and how to work in an Agile-style process. Yes you need to be able to write user stories and acceptance criteria, perhaps BDD style cucumbers too; yes you need to be able to manage a backlog and prioritises and partake in planning meetings.

But how do you know what should be a priority?

How do you know what will deliver value? And please customers? Satisfy stakeholders?

Importantly Product Owners need to be able to do the work behind the backlog.

Product Owners need to meet people, have the conversations, do the analysis and thinking behind those things. Any idiot can pick random items form a backlog but it takes skills and experience to maximise value.

Product Owners need to be able to identify users, segment customers, interview people, understand their needs and jobs to be done. They need to know when to run experiments and when to turn to research journals and market studies. And that might mean they need data analysis skills too.

If the product is going to sell as a commercial product you will need wider product management skills. While if your product is for internal use you need more business analysis skills. And product managers will benefit from knowing about business analysts and business analysts will benefit from knowing about product management.

You may also need specialist domain knowledge – you might need to be a subject matter expert in your own right, or you might become an SME in given time.

Some understanding of business strategy, finance, marketing, process analysis and design, user experience design and more.

Don’t underestimate the skills and experience you need to be an effective Product Owner.

- 2. Authority

At the very least a Product Owner needs the authority to nominate the work the team are going to do for the next two weeks. They need the authority to choose items form a backlog and ask the team to do them. They need the authority not to have their decisions overridden on a regular basis. (OK, it happens occasionally.)

As a general rule the more authority the Product Owner has the more effective they are going to be in their role.

The organization may confer that authority but the team need to recognise and accept it too.

I’ve seen many Product Owners who while they have the authority to nominate work for a team don’t have the authority to throw things out of the backlog. When the only way for a story to leave the backlog is for it to be developed it is very expensive. This leads to constipated backlogs that are stuffed full of worthless rubbish and where one can’t see the wood for the trees.

If the Product Owner doesn’t have sufficient authority then either they need to borrow some or there is going to be trouble.

- 3. Legitimacy

Legitimacy is different from authority. Legitimacy is about being seen as the right person, the bonafide person to exercise authority and do the background work to find out what they need to find out in order to make those decisions.

Legitimacy means the Product Owner can go and meet customers if they want. And it means that they will get their expenses paid.

Legitimacy means that nobody else is trying to fill the Product Owner role or undermine them. In particular it means the team respect the Product Owner and trust them to make the right calls. Most of all they accept that once in a while – hopefully not too often – the Product Owner will have to say “I accept technologically X is the right thing but commercially it must be Y; full ahead and damn the torpedoes.â€

It can be hard for a Product Owner to fill their role if the team believe a senior developer – or anyone else – should be managing the backlog and prioritising work to do.

- 4. Time

Finally, and probably the most difficult… Product Owners need time to do their work.

They need time to meet customers and reflect on those encounters.

They need time to work-the-backlog, value stories, weed out expired or valueless stories, think about the product vision, talk to stakeholders and more senior people, and then ponder what happens next.

Time to evaluate what has been delivered and see if it is delivering the expected value. Time to understand whether that which has been delivered is generating more or less value than expected. Time to feedback those findings into future work: to recalibrate expected values and priorities, generate more work or invalidate other work.

Product Owners need time to look at competitor products and consider alternatives – if only to steal ideas!

They need time to work with the technical team: have conversations about stories, expand on acceptance criteria, review work in progress, perhaps test completed features and socialise with the team.

They also need time to enhance their own skills and learn more about the domain.

And if they don’t have the time to do this?

Without time they will rush into planning meetings and say “I’ve been so busy, I haven’t looked at the backlog this week, just bear with me while I choose some stories…â€

More often than not they will wing-it, they substitute opinion and guesswork instead of solid analysis, facts and data. They overlook competition and fail to listen to the team and other managers.

And O yes, they need time for their own lives and family.

I sometimes think that only Super Human’s need apply for a Product Owner role, or perhaps many Product Owners are set up to fail from day-1. Yet the role is so important.

I plan to explore this topic some more in the next few posts.

The post Product Owners need 4 things appeared first on Allan Kelly Associates.

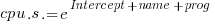

, or

, or

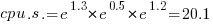

(values taken from the first column of numbers above).

(values taken from the first column of numbers above). , while for

, while for  . So

. So  , i.e., between 2.2 and 4.9 (the multiplication by 1.96 is used to give a 95% confidence interval); the

, i.e., between 2.2 and 4.9 (the multiplication by 1.96 is used to give a 95% confidence interval); the  , between 0.3 and 0.6.

, between 0.3 and 0.6.