a.k. from thus spake a.k.

In this post we shall see how we can define a smooth interpolation by connecting the points with curves rather than straight lines.

Derek Jones from The Shape of Code

The idea that there exists some wonderful technique or methodology, which solves one or more perceived software engineering problems, was given a name in 1986; the title of Brooks’ paper No Silver Bullet is a big clue that the author does not think it exists. Indeed, over the years a steady stream of papers has attempted to dispel the idea that silver bullets exist. These attempts have two things in common: the use of reasoning and facts to make their case, and failure to dispel the idea that there are silver bullets in software engineering.

Now, I am a great fan of reasoning using facts, but I am also a fan of evidence driven approaches to solving problems. There is now over 30 years of evidence that reasoning using facts is not an effective means of convincing people that silver bullets don’t exist.

Belief in silver bullets will not go away until it ceases to be in some people’s interest for them to exist.

If you have something to sell, there is a benefit to having customers believe in silver bullets: the product/research will dramatically improve performance, time to market, costs, profitability, etc…

Belief in silver bullets is not unfounded. Computing has a 70-year history of things going faster, getting cheaper and systems doing what was once thought impossible. The press has bought into this and amazing success stories abound. Having worked on a few projects that delivered faster/cheaper/impossible systems, I know that no silver bullets were involved, just lots of hard work and sometimes being in the right place at the right time. Hard work and happenstance don’t make for feel-good headlines, and rarely get mentioned in the press.

When faced with a problem, the young and inexperienced tend to be optimists; there must be a silver bullet better way of doing this that is fast/cheap/efficient. The computing field has been evolving so rapidly that many of those involved are young and inexperienced; fertile ground for belief in silver bullets to flourish.

Consequences of a belief in silver bullets in industry include time/cost overruns on projects and money wasted on tools that are never used. In academia, a belief in silver bullets results in the pointless invention of new programming languages, methodologies, programming techniques, etc.

The belief in silver bullets will not fade away until the rate of change in computing slows to a crawl and most of those involved have gained substantial experience from which they can see that results come from hard work (and some amount of luck).

Allan Kelly from Allan Kelly Associates

Someone asked the other day: how should an organisation be designed?

There are two potential answers, which actually aren’t as contradictory as they look at first sight.

The first is very simple: Don’t.

That is, don’t design your organization, don’t set out an organizational chart, don’t set out a plan and aim to restructure your organization to that plan. Rather create the conditions to let a structure emerge.

I suppose it’s the difference between “design” meaning “create a plan for the way you want things to be” and “design” meaning “the way things are arranged.” To differentiate them the first might be called “intentional design” and the latter “emergent design.”

That does not necessarily imply all emergent structures are good. As we see in code, emergent designs are not always the best, and over time they need refactoring, which implies that at some point there needs to be intentional design.

Put it like this: I’d rather your organization pulls the design than you push a design on the organization.

Organizational structure is itself a function of business strategy. And both need to be part emergent and part intentional. Although you might have noticed I tend towards emergent while most of the world tends towards intentional!

Thus it helps to have a reference model of how you think the organization should be, maybe something to steer the organization towards.

So the second answer to the question would be longer:

They are the main points at any rate. If you’d like to know more Continuous Digital contains a longer discussion of the topic. (Continuous Digital actually builds on Xanpan in this regard, and the (never finished) Xanpan Appendix discusses the same idea.)

Sign-up to receive these posts by e-mail and free eBook of Xanpan

The post Organizational structure in the Digital and Agile age appeared first on Allan Kelly Associates.

Allan Kelly from Allan Kelly Associates

In August I’m running a 1-day workshop in Zurich with Vasco Duarte on the bleeding edge of Agile: #NoProjects and #NoEstimates for Digital First companies.

This is a pre-conference event for the ALE 2018 conference which is happening the same week in Zurich. Everyone is welcome; you don’t need to attend the conference.

If you book in the next two weeks you get it cheap; after July 20 the price goes up – although it’s still only a few hundred euros.

Book now, save money and secure your place – places are limited!

For those who can’t get to Zurich in August I’ve got a Continuous Digital workshop of my own and a half-day management briefing. Right now you can book either of these for private in-house delivery. I’m looking at offering these as public courses here in London; if you are interested, get in touch and help me fix a date.

(I have a love-hate relationship with #NoProjects: I’d love to retire the name but it resonates with so many people. So I tend to use #NoProjects when I’m discussing my critique of the project model and Continuous Digital when I’m setting out my preferred alternative.)

The post #NoProject #NoEstimates workshop appeared first on Allan Kelly Associates.

Anders Schau Knatten from C++ on a Friday

I know it’s not Friday, but I couldn’t wait to share this: Yesterday I signed the contract for my new job as senior developer at Zivid Labs!

While looking for a new position this time, I talked to a lot of cool companies. Zivid stuck out as the most interesting, and I’m very happy to have been accepted into their team!

Zivid have made the world’s most accurate real-time 3D color camera. In other words, the world’s coolest eyes for robots. The main API is in modern, clean C++, with bindings to other languages as well. My main role will be working on the C++ parts of the API, as well as other C++ systems and frameworks. This is actually my first C++ job for over five years, so you can expect me to increase activity both on this blog and at C++ conferences, both of which I really look forward to!

Why did I choose Zivid?

Now it’s time to finish paternity leave, have a few weeks of much needed vacation, then I’ll join the team on 1 October. I’m really looking forward to joining an awesome team making an awesome product!

Derek Jones from The Shape of Code

How long will it take to compile a source file?

When computers were a lot slower than they are today, this question was of general interest. Job scheduling is more effective when reliable runtime estimates are available, and developers want to know if there is enough time to get a coffee before the compile finishes.

An embarrassing fact about compile time performance used to be that a large percentage of compile time was spent doing lexical analysis [“The cost of lexical analysis”, I cannot find an online copy]. Why was this embarrassing? Compiler writers like to boast about all the fancy optimizations their compiler does; but doing fancy stuff consumes lots of resources, so why were compilers spending so much of their time doing simple things like lexical analysis? The reality was that fancy compiler optimizations were not commercially viable until developer computers contained tens of megabytes of memory, i.e., very few pre-1990 compilers did any real optimization (people are still fussing over lexer performance).

An analysis of the data in Captain Dennis Miller’s Master’s thesis (late Rome period) finds compile time is proportional to the square root of the number of tokens in the source (code+data); more complicated models are a slightly better fit. Where did square root come from? I expected a linear relationship, but would be willing to go with log. The measurements are from Ada compilers in the mid 1980s. I know several people who worked on Ada compilers during that time, and they were implementing the latest fancy optimizations (Ada was going to be the next big thing and the venture capital was flowing; big companies, with big computers were going to be paying lots of money to use Ada, but then microcomputers came along). I think that square root is driven by OS resource limitations: the compilers are using lots of memory and a noticeable amount of time is spent swapping.
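One way to check a claim like "compile time is proportional to the square root of the number of tokens" is to fit a power law, seconds = c * tokens^b, by least squares on the logs and see whether the exponent b comes out near 0.5. The sketch below does this in plain Python. The function name `fit_power_law` and the numbers are made up for illustration; they are not the thesis data and this is not necessarily the model fitting used in the analysis above.

```python
import math

def fit_power_law(tokens, seconds):
    """Least-squares fit of seconds = c * tokens**b in log-log space.

    Returns (c, b). An exponent b near 0.5 suggests a square-root relationship,
    b near 1.0 a linear one.
    """
    xs = [math.log(t) for t in tokens]
    ys = [math.log(s) for s in seconds]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx                       # slope in log-log space = exponent
    c = math.exp(mean_y - b * mean_x)   # intercept in log-log space = log(c)
    return c, b

# Hypothetical measurements, constructed to follow 0.2 * sqrt(tokens) exactly.
tokens = [100, 400, 1600, 6400, 25600]
seconds = [0.2 * math.sqrt(t) for t in tokens]

c, b = fit_power_law(tokens, seconds)
print(f"seconds ~ {c:.3f} * tokens^{b:.3f}")
```

With real measurements the fitted exponent will be noisy, so it is worth comparing the fit against the linear (b = 1) and logarithmic alternatives mentioned above before reading much into it.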

So computers got a lot faster and people lost interest in estimates of how long it would take to compile individual files. I have not seen any interest in predicting how long it would take to compile whole projects (just complaints about how long it takes). There has been some work on progress indicators, updated as compilation progresses, which is a step in the right direction. Perhaps somebody has recorded compile time information and thrown machine learning at it; I usually ignore machine learning papers applied to software engineering and perhaps I have missed something. Pointers to project compile time prediction work welcome.

Then along came just-in-time compilation. Now people want to estimate how long it will take to generate machine code from some intermediate form that is being interpreted.

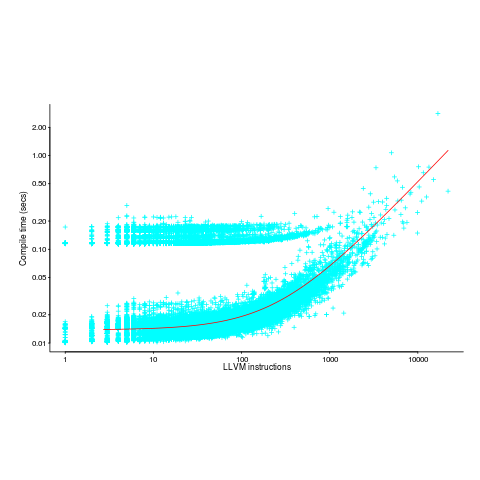

The plot below (thanks to Rafael Auler for kindly supplying the data from his paper) shows the time taken to generate code from functions containing a given number of LLVM instructions (an intermediate code), at optimization level O3. The red line is a regression fit to one of the ‘arms’ and shows constant time for less than 100’ish instructions and then a linear relationship. I have no idea why the time is roughly constant for a large number of functions.
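A "constant, then linear" shape like the one described for the red line can be modelled as a hinge: time = base + slope * max(0, n - knee). The sketch below fits such a model by trying a few candidate knee positions and doing an ordinary least-squares fit for each. The function name `fit_hinge`, the candidate knees and the data are all hypothetical; this is not the regression behind the plot, only an illustration of the model shape.

```python
def fit_hinge(sizes, times, candidate_knees):
    """Fit times = base + slope * max(0, size - knee).

    For each candidate knee, base and slope come from simple linear regression
    on the hinge term; the knee with the smallest squared error wins.
    Returns (knee, base, slope).
    """
    best = None
    n = len(sizes)
    for knee in candidate_knees:
        hs = [max(0.0, s - knee) for s in sizes]
        mean_h = sum(hs) / n
        mean_t = sum(times) / n
        shh = sum((h - mean_h) ** 2 for h in hs)
        if shh == 0:
            continue  # every point is below this knee, so slope is undefined
        slope = sum((h - mean_h) * (t - mean_t) for h, t in zip(hs, times)) / shh
        base = mean_t - slope * mean_h
        sse = sum((base + slope * h - t) ** 2 for h, t in zip(hs, times))
        if best is None or sse < best[0]:
            best = (sse, knee, base, slope)
    if best is None:
        raise ValueError("no candidate knee leaves any points above it")
    return best[1], best[2], best[3]

# Made-up data: 2.0 ms up to 100 instructions, then 0.01 ms per extra instruction.
sizes = [10, 20, 50, 80, 100, 150, 200, 400, 800]
times = [2.0 + 0.01 * max(0, s - 100) for s in sizes]

knee, base, slope = fit_hinge(sizes, times, candidate_knees=[50, 100, 200])
print(knee, round(base, 3), round(slope, 4))
```

Because the plot has more than one ‘arm’, a single hinge would only describe one of them; the points would need to be separated by arm before fitting.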

There is a lot of variation for functions containing the same number of instructions. This is to be expected when lots of different optimizations are being tried; sometimes a function will contain lots of the kind of code that a particular optimization spends a lot of time processing, and sometimes the code will not contain anything interesting (i.e., no optimizations are found).

Paul Grenyer from Paul Grenyer

Alfredo Correa from Simplify C++!

Today I am happy to present a guest post by Alfredo Correa about covariant visitors for std::variant. Alfredo works at Lawrence Livermore National Laboratory where he uses C++ to develop […]

The post Functions of Variants are Covariant appeared first on Simplify C++!.

Derek Jones from The Shape of Code

I last looked at the research on teaching programming around 10 years ago and I have been catching up with what has been going on; in brief: same old, same old. One of the best papers on the subject is still: Language-independent conceptual “Bugs”

The research activity is still focused on making the tools and language ‘better’. There is a deafening silence on the possibility that those doing the teaching could not teach their way out of a paper bag. Nobody is brave enough to suggest that teacher training might be a worthwhile investment, or that lectures oriented to what is useful (rather than what the lecturer finds interesting) would be appreciated by students.

I have always thought that researching the teaching of programming had no practical purpose, other than possibly helping universities increase the number of students graduating with computing degrees (some universities are solving the problem students have with programming by offering degrees that don’t involve being able to program). I still think that teaching programming to school children is at best a waste of time.

My experience with students learning to program is from a very long time ago. The process involved listening to confusing and disjoint lectures, reading books and figuring out what worked by trial and error. Students were not taught to program, they got thrown in at the deep end and were expected to survive. Anybody who could handle this stood some chance of being able to handle developing software in the ‘real world’; universities were (accidentally) graduating people with the skills industry needed. However, these days universities are supposed to be customer focused, what industry needs is irrelevant (my experience of sitting on departmental industry panels is that the head of department tells us what they are thinking of doing {i.e., new courses for which there will be lots of paying students} and we try to talk him/her out of the sillier ideas); too many fee paying students find programming too hard, let’s offer computing degrees that don’t require any programming.

Would you hire a recent graduate, for a development role, who had trouble figuring out how to fix syntax errors in their code? Surely, the minimum requirement is somebody who gets some pleasure from coding, even if they don’t want to spend lots of time doing it.

There is a shortage of software developers and flying turkeys are still with us.

Allan Kelly from Allan Kelly Associates

I’ve long worried about “Best Practices”. Sure I usually play along at the time but lurking in the back of my mind, waiting for a suitable opportunity are two questions:

I was once told by someone from the oil industry that it was common for contracts to specify “best practice” should be used. But seldom was the actual practice specified. Instead each party to the contract would interpret best practice as they wished, until something went wrong. At that point, after an accident, after money was lost they would go to court and a judge would decide what was best practice.

Sure practice X might be the best known way of doing things at the moment but how much better could it be? By declaring something “best practice” you can be self-limiting and potentially prevent innovation.

Now a piece in MIT Sloan Management Review (Why Best Practices Often Fall Short, Jérôme Barthélemy, February 2018) adds to the debate and highlights a few more problems.

Just for openers, sometimes people mistakenly identify the practice creating the benefits. Apparently some people looked at Pixar animation and decided that having rest rooms (toilets to us English speakers) in the centre of an office floor enhances creativity. They might do, but there is so much else happening at Pixar that moving all the toilets in your organization will probably make no difference at all.

But it is worse than that.

Adopting best practice from elsewhere does not mean it will be best practice in your environment, but adopting that “best practice” will be disruptive. Think of all the money you will need to spend relocating the toilets, all the people who will be upset by a desk move they don’t want, all the lost productivity while the work is going on.

The author suggests that in some cases the disruption costs are so high that the “best practice” will never cover the costs of the change. Organizations are better off shunning the best practice and carrying on as they are. (ERP anyone?)

It gets worse.

There is risk in those best practices. Risk that they will cost more, risk that they won’t be implemented correctly and risk that they will backfire. What was best practice at one organization might not be best practice in yours. (Which might imply you need even more change, even more disruption at even more cost.)

In fact, some best practices – like stock options for executives – can go horrendously wrong and induce behaviours you most definitely don’t want.

So what is a poor company to do?

Well, the author suggests something that does work: copying good practices. Not best but “just OK”. That works. Copy the mundane stuff, the proven stuff. The costs and risks of a big change are avoided. (This sounds a bit like In Search of Mediocracy.)

In my world that means you want to be getting better at doing Agile instead of trying to leapfrog Agile and move to DevOps in one bound.

The author also suggests that where your competitive advantage is concerned you should keep your cards close to your chest. Do things yourself. Work out what your best practice is, work out how you can improve yourself.

I’ve long argued that I want teams to learn and learn for themselves rather than have change done to them. But I also want teams to steal. When they see other teams – at home or elsewhere – doing good things they should steal practices. The important thing from my point of view is for the teams to decide for themselves.

The post Best practices considered harmfull appeared first on Allan Kelly Associates.