Jon Jagger from less code, more software

Sapiens is an excellent book by Yuval Noah Harari (isbn 978-0-099-59008-8). As usual I'm going to quote from a few pages.

Whereas chimpanzees spend five hours a day chewing raw food, a single hour suffices for people eating cooked food.

Since long intestines and large brains are both massive energy consumers, it's hard to have both. By shortening the intestines and decreasing their energy consumption, cooking inadvertently opened the way to the jumbo brains of Neanderthals and Sapiens.

Large numbers of strangers can cooperate successfully by believing in common myths.

There is some evidence that the size of the average Sapiens brain has actually decreased since the age of foraging. Survival in that era required superb mental abilities from everyone.

What characterises all these acts of communication is that the entities being addressed are local beings. They are not universal gods, but rather a particular deer, a particular tree, a particular stream, a particular ghost.

The extra food did not translate into a better diet or more leisure. Rather, it translated into population explosions and pampered elites.

The new agricultural tasks demanded so much time that people were forced to settle permanently next to their wheat fields.

This is the essence of the Agricultural Revolution: the ability to keep more people alive under worse conditions.

One of history's few iron laws is that luxuries tend to become necessities and to spawn new obligations.

Evolution is based on difference, not on equality.

There is not a single organ in the human body that only does the job its prototype did when it first appeared hundreds of millions of years ago.

The mere fact that Mediterranean people believed in gold would cause Indians to start believing in it as well. Even if Indians still had no real use for gold, the fact that Mediterranean people wanted it would be enough to make the Indians value it.

The first religious effect of the Agricultural Revolution was to turn plants and animals from equal members of a spiritual round table into property.

The monotheist religions expelled the gods through the front door with a lot of fanfare, only to take them back in through the side window. Christianity, for example, developed its own pantheon of saints, whose cults differed little from those of the polytheistic gods.

Level two chaos is chaos that reacts to predictions about it, and therefore can never be predicted accurately.

In many societies, more people are in danger of dying from obesity than from starvation.

Each year the US population spends more money on diets than the amount needed to feed all the hungry people in the rest of the world.

Throughout history, the upper classes always claimed to be smarter, stronger and generally better than the underclasses... With the help of new medical capabilities, the pretensions of the upper classes might soon become an objective reality.

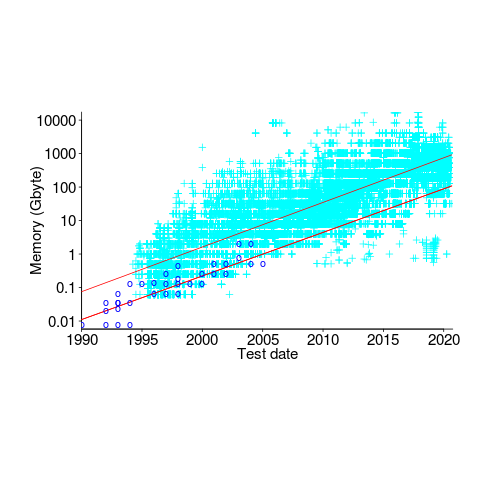

Hardware vendors are more likely to submit SPEC results for their high-end systems than for their run-of-the-mill systems. However, if we are looking at rate of growth, rather than absolute memory capacity, the results may be representative of typical customer systems.
