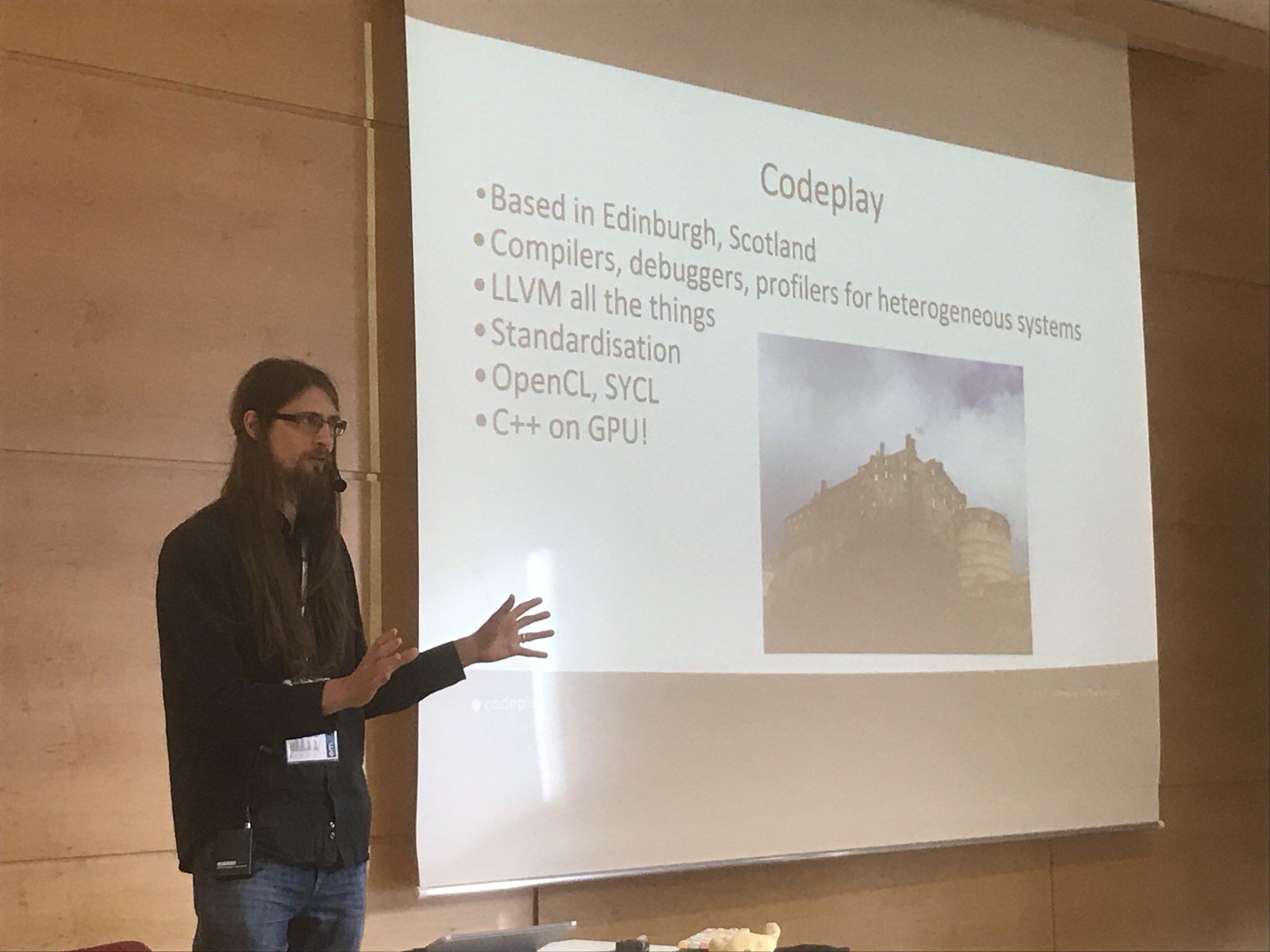

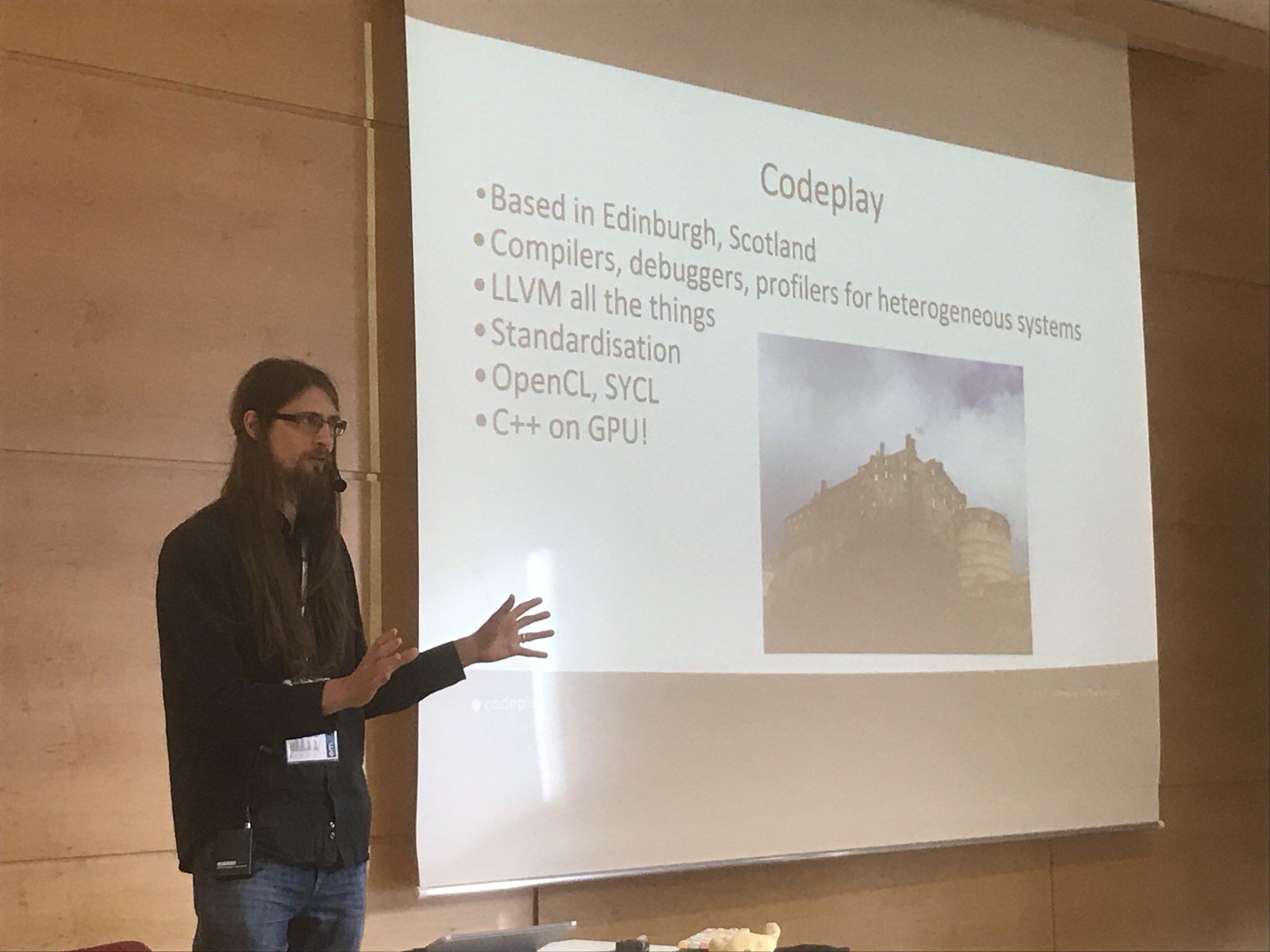

Simon Brand from Simon Brand

emBO++ is a conference focused on C++ on embedded systems in Bochum, Germany. This was its second year of operation, but the first that I’ve attended. It was a great conference, so I’m writing a short report to hopefully convince more of you to attend next year!

The conference took place over four days: an evening of lightning talks and burgers, a workshop day, a day of talks (what you might call the conference proper), and finally an unofficial standards meeting for those interested in SG14. This made for a lot of variety, and each day was valuable.

Venue

One thing I really enjoyed about emBO++ was that the different tech and social events were dotted around the city. This meant that I actually got to see some of Bochum, get lost navigating the train system, walk around town at night, etc., which made a nice change from being cooped up in a hotel for a few days.

The main conference venue was at the Zentrum für IT-Sicherheit (Centre for IT Security). It was a spacious building with a lot of light and large social areas, so it suited the conference environment well. The only problem was that it used to be a military building and was lined with copper, making the thing into one huge Faraday cage. This meant that WiFi was an issue for the first few hours of the day, but it got sorted eventually.

Food and Drink

The catering at the main conference location was really excellent: a variety of tasty food with healthy options and large quantities. Even better was the selection of drinks available, which mostly consisted of interesting soft drinks I’d never seen before, such as bottled Matcha with lime and a number of varieties of Mate. All the locations we went to for food and drinks were great – especially the speakers’ dinner. A lot of thought was obviously put into this area of the conference, and it showed.

Workshops

There were four workshops on the first day of the conference with two running in parallel. The two which I attended were very interesting and instructive, but I wish that they had been more hands-on.

Jörn Seger – Getting Started with Yocto

I was in two minds about attending this workshop. We need to use Yocto a little bit in my current project, so I could attend the workshop in order to gain more knowledge about it. On the other hand, I’d then be the most experienced member of my team in Yocto and would be forced to fix all the issues!

In the end I decided to go along, and it was definitely worthwhile. Previously I’d mostly muddled along without an understanding of the fundamentals of the system; this workshop provided those.

Kris Jusiak – Embedding a Compile-Time-State-Machine

Kris gave a workshop on Boost.SML, which is an embedded domain-specific language (EDSL) for encoding expressive, high-performance state machines in C++. The library is very impressive, and it was valuable to see all the different use-cases it supports and how it lets you switch out the frontend and backend of the system. I was particularly interested in this session as my talk the next day was on EDSLs, so it was an opportunity to steal some things to mention in my talk.

You can find Boost.SML here.

Talks

There were two tracks for most of the day, with the first and final ones being plenary sessions. There was a strong variety of talks, and I felt that my time was well-spent at all of them.

Simon Brand – Embedded DSLs for Embedded Programming

My talk! I think it went down well. I got some good engagement and questions from the audience, although not much feedback from the attendees later on in the day. I guess I’ll need to wait for it to get torn apart on YouTube.

Klemens gave an excellent talk about an ARM coroutine library which he implemented. This talk has nothing to do with the C++ Coroutines TS, instead focusing on how coroutines can be implemented in a very low-overhead manner. In Klemens’ library, the user provides some memory to be used as the stack for the coroutine, then there are small pieces of ARM assembly which perform the context switch when you suspend or resume that coroutine. The talk went into the performance considerations, implementation, and use in just the right amount of detail, so I would definitely recommend watching if you want an overview of the ideas.
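To make the "user-provided stack plus context switch" idea concrete, here is a minimal sketch. It is not Klemens’ library and contains no ARM assembly: it swaps in the POSIX `ucontext` functions (`getcontext`, `makecontext`, `swapcontext`) to do the switching, so it assumes a platform such as Linux with glibc. The names `coro_body`, `run_demo`, and `trace` are made up for illustration.

```cpp
#include <ucontext.h>
#include <vector>

namespace {
ucontext_t caller_ctx;   // where to return to when the coroutine suspends
ucontext_t coro_ctx;     // saved state of the coroutine itself
std::vector<int> trace;  // records the order in which code runs

// Hypothetical coroutine body: does some work, suspends, then finishes.
void coro_body() {
    trace.push_back(1);
    swapcontext(&coro_ctx, &caller_ctx);  // suspend: switch back to the caller
    trace.push_back(3);
    // Returning from here continues at uc_link (the caller context).
}
}  // namespace

std::vector<int> run_demo() {
    // The user supplies the memory that the coroutine uses as its stack.
    static char stack[64 * 1024];
    trace.clear();

    getcontext(&coro_ctx);
    coro_ctx.uc_stack.ss_sp = stack;
    coro_ctx.uc_stack.ss_size = sizeof stack;
    coro_ctx.uc_link = &caller_ctx;
    makecontext(&coro_ctx, coro_body, 0);

    swapcontext(&caller_ctx, &coro_ctx);  // resume: runs until the suspend
    trace.push_back(2);
    swapcontext(&caller_ctx, &coro_ctx);  // resume again: runs to completion
    trace.push_back(4);
    return trace;
}
```

Calling `run_demo()` interleaves the caller and the coroutine, so the trace comes back in the order 1, 2, 3, 4. A hand-written assembly switch like the one described in the talk would replace the `swapcontext` calls with something far cheaper, since `swapcontext` also saves and restores the signal mask.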

The library and presentation can be found here.

Emil Fresk – The compile-time, reactive scheduler: CRECT

CRECT is a task scheduler which carries out its work at compile time, therefore almost entirely disappearing from the generated assembly. Emil’s lofty goal for the talk was to present all of the necessary concepts such that those viewing the talk would feel like they could go off and implement something similar afterwards. I think he mostly succeeded in this – although a fair amount of metaprogramming skills would be required! He showed how to use the library to specify the jobs which need to be executed, the resources which they require, and when they should be scheduled to run. After we understood the fundamentals of the library, we learned how this actually gets executed at compile-time in order to produce the final scheduled output. Highly recommended for those who work with embedded systems and want a better way of scheduling their work.
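As a flavour of what "doing the work at compile time" can look like, here is a small sketch that is not CRECT’s API or algorithm. It assumes a hypothetical `Job` type carrying a priority and a bitmask of resources, and uses a C++14 `constexpr` function to work out, for each resource, the highest priority of any job that touches it. The compiler evaluates everything, so only the resulting constants would end up in the binary.

```cpp
#include <cstddef>

// Hypothetical job description: a priority and a bitmask of the resources
// it uses (bit i set means the job uses resource i).
struct Job {
    unsigned priority;
    unsigned resources;
};

constexpr Job jobs[] = {
    {1, 0x1},  // low-priority job using resource 0
    {2, 0x3},  // mid-priority job using resources 0 and 1
    {3, 0x2},  // high-priority job using resource 1
};

// Highest priority among all jobs that use the given resource, or 0 if no
// job uses it. Evaluated entirely by the compiler when used in a constant
// expression.
template <std::size_t N>
constexpr unsigned ceiling(const Job (&js)[N], unsigned resource) {
    unsigned result = 0;
    for (std::size_t i = 0; i < N; ++i) {
        if (((js[i].resources >> resource) & 1u) != 0 &&
            js[i].priority > result) {
            result = js[i].priority;
        }
    }
    return result;
}

// These checks run at compile time; a wrong job table fails the build.
static_assert(ceiling(jobs, 0) == 2, "resource 0 is shared by jobs 1 and 2");
static_assert(ceiling(jobs, 1) == 3, "resource 1 is shared by jobs 2 and 3");
```

The point of the sketch is only the shape of the technique: the job and resource description is ordinary data, and the analysis over it is a constant expression rather than code that runs on the device.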

You can find CRECT here.

Ben Craig – Standardizing an OS-less subset of C++

If you watch one talk from the conference it should be this one. C++ has had a “freestanding” variant for a long time, and it’s been neglected for the same amount of time. Ben talked about all the things which should not be available in freestanding mode but are, and those which should be but are not. He presented his vision for what should be standards-mandated facilities available in freestanding C++ implementations, and a tentative path to making this a reality. Particularly of interest were the odd edge cases which I hadn’t considered. For example, it turns out that std::array has to #include <string> somewhere down the line, because my_array.at(n) can throw an exception (std::out_of_range), and that exception has a constructor which takes std::string as an argument. These tiny issues will make a solid standard for freestanding difficult to pin down and agree on, but I think it’s a worthy cause.
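The std::array edge case is easy to check on a hosted implementation. The sketch below uses only standard library facilities; the function name `at_throws_out_of_range` is made up for illustration.

```cpp
#include <array>
#include <cstddef>
#include <stdexcept>
#include <string>
#include <type_traits>

// std::out_of_range can be constructed from a std::string, which is the
// dependency described above.
static_assert(
    std::is_constructible<std::out_of_range, const std::string&>::value,
    "std::out_of_range has a constructor taking std::string");

// Returns true if my_array.at(index) throws std::out_of_range.
bool at_throws_out_of_range(std::size_t index) {
    std::array<int, 3> my_array{{1, 2, 3}};
    try {
        (void)my_array.at(index);  // bounds-checked access
        return false;
    } catch (const std::out_of_range&) {
        return true;
    }
}
```

`at_throws_out_of_range(3)` returns true and `at_throws_out_of_range(2)` returns false. By contrast, `my_array[index]` does no bounds checking and never throws, which is why the unchecked parts of std::array look like a much easier fit for a freestanding library than `at`.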

Ben’s ISO C++ paper on a freestanding standard library can be found here.

Jacek’s team has many different hardware versions to support. This makes it easy for a change to introduce regressions in some versions and not others. This talk showed how they developed the testing infrastructure to test all the hardware versions they needed on each merge request, ensuring that bad commits aren’t merged into the master branch. They wrote a simple testing framework in Haskell which was fine-tuned to their use case rather than using an existing solution like Jenkins (that’s what we use at Codeplay for solving the same problem). Jacek spoke about issues they faced and the creative solutions they put in place, such as putting a light detector over the CAPS LOCK button of a keyboard and making it blink in Morse code in order to communicate out from machines with no usable ports.
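The Morse code trick is simple to sketch. The code below is not from Jacek’s framework (which was written in Haskell); it is a hypothetical C++ illustration of the sending side, turning a message into a sequence of time units in which the light is on (`1`) or off (`0`). It assumes the usual Morse timing: a dot is one unit on, a dash is three units on, symbols within a letter are separated by one unit off, and letters by three units off. Only the letters A to Z are handled.

```cpp
#include <cctype>
#include <string>

// International Morse code for the letters A to Z.
const char* const kMorse[26] = {
    ".-",   "-...", "-.-.", "-..",  ".",    "..-.", "--.",  "....", "..",
    ".---", "-.-",  ".-..", "--",   "-.",   "---",  ".--.", "--.-", ".-.",
    "...",  "-",    "..-",  "...-", ".--",  "-..-", "-.--", "--.."};

// Converts text to a string of time units: '1' means light on for one unit,
// '0' means light off for one unit. Non-letter characters are skipped.
std::string to_blink_units(const std::string& text) {
    std::string units;
    for (char c : text) {
        const unsigned char uc = static_cast<unsigned char>(c);
        if (!std::isalpha(uc)) continue;
        if (!units.empty()) units += "000";  // gap between letters
        const char* code = kMorse[std::toupper(uc) - 'A'];
        for (const char* p = code; *p != '\0'; ++p) {
            if (p != code) units += '0';  // gap between symbols in a letter
            units += (*p == '.') ? "1" : "111";
        }
    }
    return units;
}
```

For example, `to_blink_units("A")` yields `10111`: one unit on for the dot, one unit off, then three units on for the dash. A light detector on the receiving side would sample at the same unit rate and reverse the mapping.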

Odin’s talk summed up some current major problems that are facing the embedded community, and roped together all of the talks which had come before. It was cool to see the overlap in all the talks in areas of abstraction, EDSLs, making choices at compile time, etc.

Closing

I had a great time at emBO++ and would whole-heartedly recommend attending next year. The talks should be online in the next few months, so I look forward to watching those which I didn’t attend. The conference is mostly directed at embedded C++ developers, but I think it would be valuable to anyone doing low-latency programming on non-embedded systems, or those writing C/Rust/whatever for embedded platforms.

Thank you to Marie, Odin, Paul, Stephan, and Tabea for inviting me to talk and organising these great few days!

$$
S_{\text{sd-est}}=\sqrt{f_2\left[0.25k^2\left(\frac{f_1}{f_2}\right)^4+k^2\left(\frac{f_1}{f_2}\right)^3+0.5k\left(\frac{f_1}{f_2}\right)^2\right]}
$$

The quantities defined alongside this formula are the estimated number of distinct faults, the observed number of distinct faults, the total number of faults, the number of distinct faults that occurred once ($f_1$), and the number of distinct faults that occurred twice ($f_2$).